Speaker 1 (00:00):

Ready, go.

Speaker 2 (17:41):

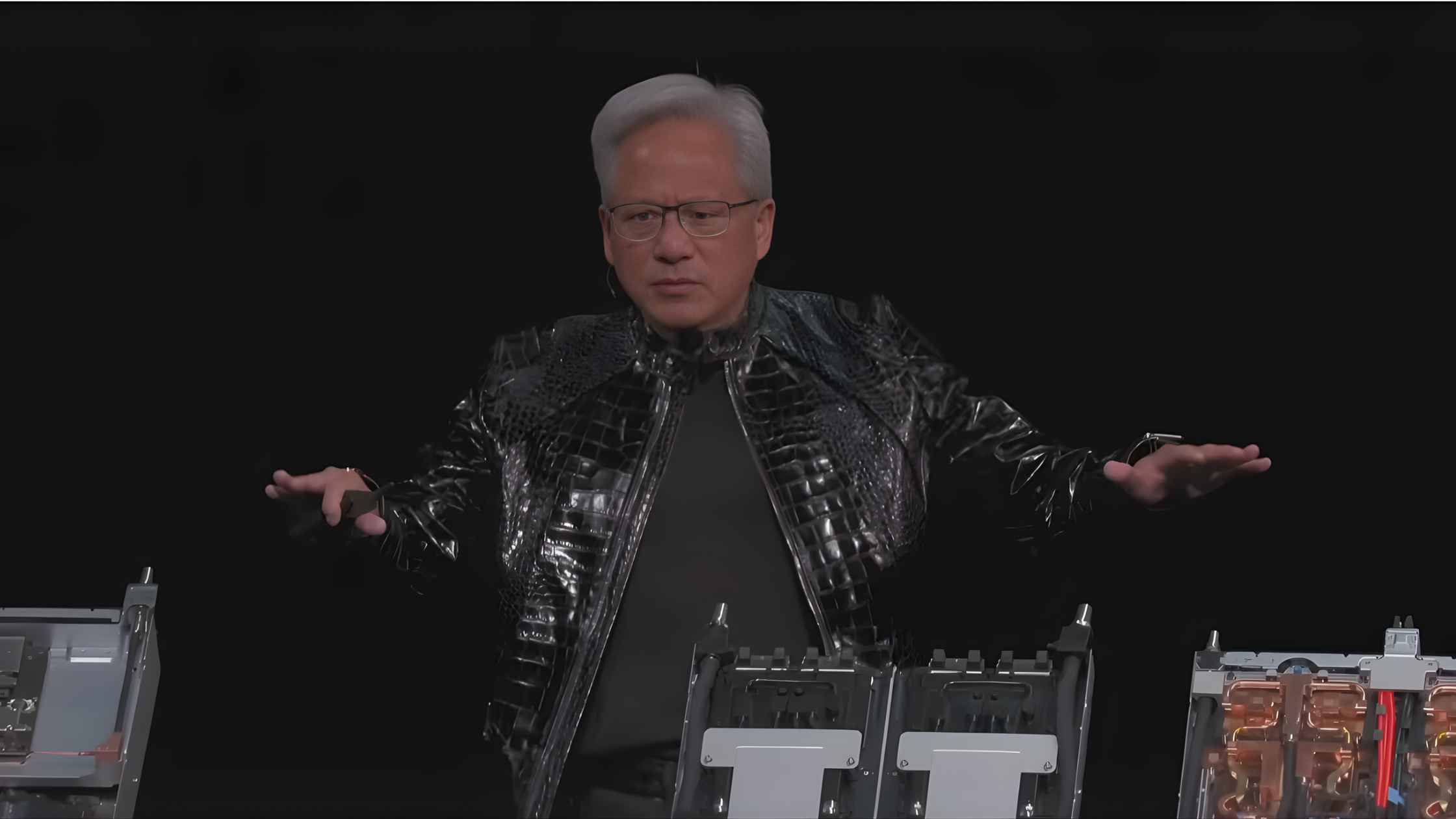

Welcome to the stage, NVIDIA founder and CEO, Jensen Huang.

Jensen Huang (17:55):

Hello, Las Vegas. Happy New Year. Welcome to CES. Well, we have about 15 keynotes worth of material to pack in here. I'm so happy to see all of you. You got 3,000 people in this auditorium. There's 2,000 people in a courtyard watching us. There's another 1,000 people apparently in the fourth floor where they're supposed to be NVIDIA show floors, all watching this keynote. Of course, millions around the world are going to be watching this to kick off this new year.

(18:27)

Well, every 10 to 15 years, the computer industry resets. A new platform shift happens. From mainframe to PC, PC to internet, internet to cloud, cloud to mobile. Each time the world of applications target a new platform. That's why it's called a platform shift. You write new applications for a new computer. Except this time, there are two simultaneous platform shifts, in fact, happening at the same time.

(19:03)

While we now move to AI, applications are now going to be built on top of AI. At first, people thought AIs are applications, and in fact, AIs are applications, but you're going to build applications on top of AIs. In addition to that, how you run the software, how you develop the software, fundamentally changed. The entire five-layer stack of the computer industry is being reinvented. You no longer program the software, you train the software. You don't run it on CPUs, you run it on GPUs.

(19:43)

Whereas applications were prerecorded, pre-compiled and run on your device, now applications understand the context and generate every single pixel, every single token, completely from scratch, every single time. Computing has been fundamentally reshaped as a result of accelerated computing, as a result of artificial intelligence. Every single layer of that five layer cake is now being reinvented.

(20:14)

Well, what that means is some $10 trillion or so of the last decade of computing is now being modernized to this new way of doing computing. What that means is hundreds of billions of dollars, a couple of hundred billion dollars in VC funding each year is going into modernizing and inventing this new world. What it means is a hundred trillion dollars of industry, several percent of which is R&D budget, is shifting over to artificial intelligence.

(20:45)

People ask, "Where is the money coming from?" That's where the money's coming from. The modernization of AI to AI, the shifting of R&D budgets from classical methods to now artificial intelligence methods and enormous amounts of investments coming into this industry, which explains why we're so busy. This last year was no different. This last year was incredible. This last year … There's a slide coming.

(21:16)

This is what happens when you don't practice. It's the first keynote of the year. I hope it's your first keynote of the year, otherwise you have been pretty busy. This is our first keynote of the year. We're going to get the spiderwebs out. So 2025 was an incredible year. It seemed like everything was happening all at the same time, and in fact, it probably was. The first thing, of course, is scaling laws.

(21:44)

In 2015, the first language model that I thought was really going to make a difference, made a huge difference. It was called BERT. 2017, transformers came. It wasn't until five years later, 2022, that ChatGPT moment happened and it awakened the world to the possibilities of artificial intelligence. Something very important happened a year after that. The first O1 model from ChatGPT, the first reasoning model, completely revolutionary, invented this idea called test time scaling, which is a very commonsensical thing.

(22:24)

Not only did we pre-train a model to learn, we post-train it with reinforcement learning so that it could learn skills. Now we also have test time scaling, which is another way of saying thinking. You think in real time. Each one of these phases of artificial intelligence requires enormous amount of compute, and the computing law continues to scale. Large language models continue to get better. Meanwhile, another breakthrough happened, and this breakthrough happened in 2024.

(22:55)

Agentic systems started to emerge. In 2025, it started to proliferate just about everywhere. Agentic models that have the ability to reason, look up information, do research, use tools, plan futures, simulate outcomes, all of a sudden started to solve very, very important problems. One of my favorite agentic models is called Cursor, which revolutionized the way we do software programming at NVIDIA. Agentic systems are going to really take off from here.

(23:29)

Of course, there were other types of AI. We know that large language models isn't the only type of information. Wherever the universe has information, wherever the universe has structure, we could teach a large language model, a form of language model to go understand that information, to understand its representation and to turn that into an AI. One of the biggest, most important one is physical AI. AIs that understand the laws of nature.

(23:58)

Then of course, physical AI is about AIs interacting with the world, but the world itself has information, encoded information, and that's called AI physics. In the case of physical AI, you have AI that interacts with the physical world, and you have AI physics, AI that understands the laws of physics.

(24:19)

Then lastly, one of the most important things that happened last year, the advancement of open models. We can now know that AI is going to proliferate everywhere when open source, when open innovation, when innovation across every single company and every industry around the world is activated at the same time. Open models really took off last year.

(24:43)

In fact, last year we saw the advance of DeepSeek R1, the first open model that's a reasoning system. It caught the world by surprise and it activated literally this entire movement. Really, really exciting work. We're so happy with it. Now we have open model systems all over the world of all different kinds, and we now know that open models have also reached the frontier.

(25:17)

Still solidly is six months behind the frontier models, but every single six months, a new model's emerging and these models are getting smarter and smarter. Because of that, you could see the number of downloads has exploded. The number of downloads is growing so fast because startups want to participate in the AI revolution. Large companies want to, researchers want to, students want to. Just about every single country wants to. How is it possible that intelligence, the digital form of intelligence will leave anyone behind?

(25:54)

So open models has really revolutionized artificial intelligence last year. This entire industry is going to be reshaped as a result of that. Now, we had this inkling some time ago. You might have heard that several years ago, we just started to build and operate our own AI supercomputers. We call them DGX clouds. A lot of people asked, "Are you going into the cloud business?" The answer is no. We're building these DGX supercomputers for our own use.

(26:25)

Well, it turns out we have billions of dollars of supercomputers in operation so that we could develop our open models. I am so pleased with the work that we're doing. It is starting to attract attention all over the world and all over the industries because we are doing frontier AI model work in so many different domains.

(26:46)

The work that we did in proteins, in digital biology, La-Proteina to be able to synthesize and generate proteins. OpenFold3 to understand the structure of proteins. Evo 2, how to understand and generate multiple proteins, otherwise the beginnings of cellular representation. Earth-2, AI that understands laws of physics, the work that we did with FourCastNet, the work that we did with CorrDiff really revolutionized the way that people are doing weather prediction.

(27:21)

Nemotron. We're now doing groundbreaking work there. The first hybrid transformer SSM model that's incredibly fast and therefore can think for a very long time or can think very quickly, for not a very long time and produce very, very smart intelligent answers. Nemotron 3 is groundbreaking work, and you can expect us to deliver other versions of Nemotron 3 in the near future.

(27:47)

Cosmos, a frontier, open world foundation model, one that understands how the world works. GR00T, a humanoid robotic system, articulation, mobility,

Jensen Huang (28:00):

… mobility, locomotion. These models, these technologies are now being integrated and in each one of these cases, open to the world, frontier humanoid robotics models open to the world. And then today we're going to talk a little bit about Alpamayo, the work that we've been doing in self-driving cars. Not only do we open source the models, we also open source the data that we use to train those models because in that way, only in that way can you truly trust how the models came to be. We open source all the models. We help you make derivatives from them. We have a whole suite of libraries. We call them the NeMo libraries, PhysicsNeMo libraries, the Clara NeMo libraries, the BioNeMo libraries. Each one of these libraries are lifecycle management systems of AIs so that you could process the data, you could generate data, you could train the model, you could create the model, evaluate the model, guardrail the model, all the way to deploying the model. Each one of these libraries are incredibly complex and all of it is open sourced.

(29:05)

So, now on top of this platform, NVIDIA is a frontier AI model builder and we build it in a very special way. We build it completely in the open so that we can enable every company, every industry, every country to be part of this AI revolution. I'm incredibly proud of the work that we're doing there. In fact, if you notice the charts, the chart shows that our contribution to this industry is bar none, and you're going to see us, in fact, continue to do that, if not accelerate. These models are also world-class. All systems are down. This never happens in Santa Clara. Is it because of Las Vegas? Somebody must have won a jackpot outside. All systems are down. Okay. I think my system's still down, but that's okay. I'll make it up as I go.

(30:19)

Not only are these models frontier capable, not only are they open, they also top the leaderboards. This is an area where we're very proud. They top leaderboards in intelligence. We have important models that understand multi-modality documents, otherwise known as PDFs. The most valuable content in the world are captured in PDFs, but it takes artificial intelligence to find out what's inside, interpret what's inside and help you read it. So our PDF retrievers, our PDF parsers are world-class. Our speech recognition models, absolutely world-class. Our retrieval models, basically search, semantic search, AI search, the database engine of the modern AI era, world-class. So we're on top of leaderboards constantly. This is an area we're very proud of, and all of that is in service of your ability to build AI agents. This is really a groundbreaking area of development.

(31:24)

At first, when ChatGPT came out, people said, "Gosh, it produced really interesting results, but it hallucinated greatly." The reason why it hallucinated, of course, it could memorize everything in the past, but it can't memorize everything in the future and the current, and so it needs to be grounded in research. It has to do fundamental research before it answers a question. The ability to reason about do I have to do research? Do I have to use tools? How do I break up a problem into steps? Each one of these steps, something that the AI model knows how to do, and together it is able to compose it into a sequence of steps to perform something it's never done before, never been trained to do. This is the wonderful capability of reasoning. We can encounter a circumstance we've never seen before and break it down into circumstances and knowledge or rules that we know how to do because we've experienced it in the past. So the ability for AI models now to be able to reason, incredibly powerful.

(32:28)

The reasoning capability of agents opened the doors to all of these different applications. We no longer have to train an AI model to know everything on day one, just as we don't have to know everything on day one, that we should be able to, in every circumstance, reason about how to solve that problem. Large language models has now made this fundamental leap. The ability to use reinforcement learning and chain of thought and search and planning and all these different techniques and reinforcement learning has made it possible for us to have this basic capability and it's also now completely open sourced.

(33:04)

But the thing that's really terrific is another breakthrough that happened and the first time I saw it was with Aravind's Perplexity. Perplexity, the search company, the AI search company, really innovative company. The first time I realized they were using multiple models at the same time, I thought it was completely genius. Of course, we would do that. Of course, an AI would also call upon all of the world's great AIs to solve the problem it wants to solve at any part of the reasoning chain. This is the reason why AIs are really multimodal, meaning they understand speech and images and text and videos and 3D graphics and proteins. It's multimodal.

(33:52)

It's also multi-model, meaning that they should be able to use any model that best fits the task. It is multi-cloud by definition, therefore, because these AI models are sitting in all these different places, and it also is hybrid cloud, because if you're an enterprise company or you've built a robot or whatever that device is, sometimes it's at the edge, sometimes a radio cell tower. Maybe sometimes it's in an enterprise or maybe it's a place where a hospital where you need to have the data in real time right next to you. Whatever those applications are, we know now this is what an AI application looks like in the future. Or another way to think about that, because future applications are built on AIs, this is the basic framework of future applications. This basic framework, this basic structure of agentic AIs that could do the things that I'm talking about that is multi-model has now turbocharged AI startups of all kinds. Because of all of the open models and all the tools that we've provided you, you could also customize your AIs to teach your AI skills that nobody else is teaching. Nobody else is causing their AI to become intelligent or smart in that way. You could do it for yourself. That's the work that we do with Nemotron, NeMo, and all of the things that we do with open models is intended to do. You put a smart router in front of it, and that router is essentially a manager that decides, based on the intention of the prompts that you give it, which one of the models is the best fit for that application, for solving that problem.

(35:41)

Okay. So now when you think about this architecture, what do you have? When you think about this architecture, all of a sudden you have an AI that's on the one hand, completely customizable by you, something that you could teach to do your very own skills for your company, something that's domain secret, something where you have deep domain expertise. Maybe you've got all of the data that you need to train that AI model. On the other hand, your AI is always at the frontier by definition. You're always at the frontier on the one hand. You're always customized on the other hand and it should just run. So we thought we would make the simplest of examples to make it available to you. This entire framework we call a blueprint, and we have blueprints that are integrated into enterprise SaaS platforms all over the world, and we're really pleased with the progress. But what we do is show you a short example of something that anybody can do.

Video (36:40):

Let's build a personal assistant. I want it to help me with my calendar, emails, to-do lists, and even keep an eye on my home. I use Brev to turn my DGX Spark into a personal cloud, so I can use the same interface whether I'm using a cloud GPU or a DGX Spark. I use a frontier model API to easily get started. I want it to help me with my emails so I create an email tool for my agent to call. I want my emails to stay private, so I'll add an open model that's running locally on the Spark. Now for any job, I want the agent to use the right model for the right task, so I'll use an intent-based model router. This way, prompts that need email will stay on my Spark and everything else can call the frontier model. I want my assistant to interact with my world, so I'll hook it up to Hugging Face's Reachy Mini Robot. My agent controls the head, ears, and camera of the Reachy with tool calls. I want to give Reachy a voice and I really like ElevenLabs, so I'll hook up their API.
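[Editor's sketch] The intent-based routing described in the video can be illustrated roughly as follows. This is a minimal sketch, not the demo's actual code: the keyword check stands in for a real intent classifier, and the names `route_prompt`, `local-open-model`, and `frontier-api` are hypothetical.

```python
from dataclasses import dataclass

# Hypothetical keyword list standing in for a real intent classifier.
EMAIL_KEYWORDS = ("email", "inbox", "mail")


@dataclass
class Route:
    model: str   # which model handles the prompt (hypothetical labels)
    reason: str  # why the router picked that model


def route_prompt(prompt: str) -> Route:
    """Keep email-related prompts on the local model; send the rest to the frontier API."""
    text = prompt.lower()
    if any(keyword in text for keyword in EMAIL_KEYWORDS):
        # Private data: stay on the locally hosted open model.
        return Route(model="local-open-model", reason="email stays private")
    # Everything else may call the hosted frontier model.
    return Route(model="frontier-api", reason="general request")


if __name__ == "__main__":
    for prompt in ("Summarize my unread emails", "Turn this sketch into a rendering"):
        print(prompt, "->", route_prompt(prompt).model)
```

A production router would presumably classify intent with a model rather than keywords; the point here is only the split between a private local path and a frontier path.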

Reachy (37:55):

Hi, I'm Reachy, running on DGX Spark.

Video (37:58):

Hey, Reachy, what's on my to do list today?

Reachy (38:00):

Your to-do list today, grab groceries, eggs, milk, butter, and send Jensen the new script.

Video (38:09):

Okay. Let's send Jensen that update. Tell him we'll have it for him by the end of the day.

Reachy (38:14):

Will do.

Video (38:15):

Reachy, there's a sketch too. Can you turn it into an architectural rendering?

Reachy (38:19):

Sure.

Video (38:28):

Nice. Now make a video and show me around the room.

Reachy (38:34):

Here you go.

Video (38:35):

That's great. With Brev, I can share access to my Spark and Reachy, so I'm going to share it with Ana.

Ana (38:44):

Hey, Reachy, what's Potato up to?

Reachy (38:48):

He's on the couch. I remember you don't like this. I'll tell him to get off. Potato, off the couch.

Video (38:56):

With all the progress in open source, it's incredible to see what you can build. I'd love to see what you create.

Jensen Huang (39:07):

Isn't that incredible? Now, the amazing thing is that is utterly trivial now. That is utterly trivial now, and yet, just a couple of years ago, all of that would've been impossible. Absolutely unimaginable. Well, this basic framework, this basic way of building applications using language models that are pre-trained and they're proprietary, they're frontier, combine it with customized language models into an agentic framework, a reasoning framework that allows you to access tools and files, and maybe even connect to other agents, this is basically the architecture of AI applications or applications in the modern age, and the ability for us to create these applications are incredibly fast.

(40:14)

Notice, if you give it this application information that it's never seen before or in a structure that is not represented exactly as you thought, it can still reason through it and make its best effort to reason through the data, the information to try to understand how to solve the problem, artificial intelligence. Okay. So this basic framework is now being integrated and everything that I just described, we have the benefit of working with some of the world's leading enterprise platform companies. Palantir, for example, their entire AI and data processing platform is being integrated and accelerated by NVIDIA today. ServiceNow, the world's leading customer service and employee service platform, Snowflake, the world's top data platform in the cloud, incredible work that is being done there. CodeRabbit, we're using CodeRabbit all over NVIDIA. CrowdStrike creating AIs to detect and find AI threats.

(41:24)

NetApp, their data platform now has NVIDIA semantic AI on top of it and agentic system on top of it for them to do customer service. But the important thing is this, not only is this the way that you develop applications now, this is going to be the user interface of your platform. So whether it's Palantir or ServiceNow or Snowflake and many other companies that we're working with, the agentic system is the interface. It's no longer Excel with a bunch of squares that you enter information into. Maybe it's no longer just command line. All of that multi-modality information is now possible, and the way you interact with your platform is much more, well, if you will, simple like you're interacting with people. So that's enterprise AI being revolutionized by agentic systems.

(42:22)

The next thing is physical AI. This is an area that you've seen me talk about for several years. In fact, we've been working on this for eight years. The question is how do you take something that is intelligent inside a computer and interacts with you with screens and speakers to something that can interact with the world? Meaning it can understand the common sense of how the world works. Object permanence, if I look away and I look back, that object is still there. Causality, if I push it, it tips over. It understands friction and gravity. It understands inertia, that a heavy truck rolling down the road is going to need a little bit more time to stop, that a ball is going to keep on rolling.

(43:10)

These ideas are common sense to even a little child, but for AI, it's completely unknown. So we have to create a system that allows AIs to learn the common sense of the physical world, learn its laws, but also to be able to, of course, learn from data and the data is quite scarce and to be able to evaluate whether that AI is working, meaning it has to simulate in an environment. How does an AI know that the actions that it's performing is consistent with what it should do if it doesn't have the ability to simulate the response of the physical world back on its actions? The response of its actions is really important to simulate. Otherwise, there's no way to evaluate it. It's different every time.

(43:59)

So, this basic system requires three computers. One computer, of course, the one that we know that NVIDIA builds for training the AI models. Another computer that we know is to inference the models. Inferencing the model is essentially a robotics computer that runs in the car or runs in a robot or runs in a factory, runs anywhere at the edge. But there has to be another computer that's designed for simulation, and simulation is at the heart of almost everything NVIDIA does. This is where we are most comfortable. Simulation was really the foundations of almost everything that we've done with physical AI. So we have three computers and multiple stacks that run on these computers, these libraries to make them useful. Omniverse is our digital twin physically based simulation world. Cosmos, as I mentioned earlier, is our foundation model, not a foundation model for language, but a foundation model of the world and is also aligned with language.

(45:05)

You could say something like, "What's happening to the ball?" and it'll tell you the ball's rolling down the street. So, a world foundation model, and then of course the robotics models. We have two of them. One of them is called GR00T. The other one's called Alpamayo that I'm going to tell you about. Now, one of the most important things that we have to do with physical AI is to create the data to train the AI in the first place. Where does that data come from? Rather than instead of having languages, because we created a bunch of texts that are what we consider ground truth that the AI can learn from, how do we teach an AI the ground truth of physics? There are lots and lots of videos, but hardly enough to capture the diversity and the type of interactions that we need. So this is where great minds came together and transformed what used to be compute into data.

(46:02)

Now, using synthetic data generation that is grounded and conditioned by the laws of physics, grounded and conditioned by ground truth, we can now selectively, cleverly generate data that we can then use to train the AI. So for example, what comes into this AI, this Cosmos AI world model on the left over here is the output of a traffic simulator. Now, this traffic simulator is hardly enough for an AI to learn from. We can take this, put it into a Cosmos foundation model and generate surround video that is physically based and physically plausible that the AI can now learn from, and there are so many examples of this. Let me show you what Cosmos can do.

Speaker 3 (46:54):

The ChatGPT moment for physical AI is nearly here, but the challenge is clear. The physical world is diverse and unpredictable. Collecting real world training data is slow and costly, and it's never enough. The answer is synthetic data. It starts with NVIDIA Cosmos, an open frontier world foundation model for physical AI, pre-trained on internet scale video, real driving and robotics data, and 3D simulation. Cosmos learned a unified representation of the world, able to align language, images, 3D, and action. It performs physical AI skills like generation, reasoning, and trajectory prediction. From a single image, Cosmos generates realistic video. From 3D scene descriptions, physically coherent motion, from driving telemetry and sensor logs, surround video, from planning simulators, multi-camera environments, or from scenario prompts, it brings edge cases to life. Developers can run interactive closed loop simulations in Cosmos. When actions are made, the world responds. Cosmos reasons. It analyzes edge scenarios, breaks them down into familiar physical interactions and reasons about what could happen next. Cosmos turns compute into data, training AVs for the long tail and robots how to adapt for every scenario.

Jensen Huang (49:06):

I know. It's incredible. Cosmos is the world's leading foundation model, world foundation model. It's been downloaded millions of times, used all over the world, getting the world ready for this new era of physical AI. We use it ourselves as well. We use it ourselves to create our self-driving car. Using it for scenario generation and using it for evaluation, we could have something that allows us to effectively travel billions, trillions of miles, but doing it inside a computer, and we've made enormous progress.

(49:48)

Today, we're announcing Alpamayo, the world's first thinking, reasoning autonomous vehicle AI. Alpamayo is trained end to end, literally from camera in to actuation out. The camera in, lots and lots of miles that are driven by itself, where we, human, drive it, using human demonstration, and we have lots and lots of miles that are generated by Cosmos. In addition to that, hundreds of thousands of examples are labeled very, very carefully so that we could teach the car how to drive. Alpamayo does something that's really special. Not only does it take sensor input and activates steering wheel, brakes and acceleration, it also reasons about what action it is about to take. It tells you what action it's going to take, the reasons by which it came about that action, and then of course, the trajectory.

(50:57)

All of these are coupled directly and trained very specifically by a large combination of human trained and as well as Cosmos generated data. The result of it is just really incredible. Not only does your car drive as you would expect it to drive, and it drives so naturally because it learned directly from human demonstrators, but in every single scenario, when it comes up to the scenario, it tells you what it's going to do and it reasons about what it's about to do.

(51:28)

Now, the reason why this is so important is because of the long tail of driving. It's impossible for us to simply collect every single possible scenario for everything that could ever happen in every single country, in every single circumstance that's possibly ever going to happen for all the population. However, it's very likely that every scenario, if decomposed into a whole bunch of other smaller scenarios are quite normal for you to understand. So these long tails will be decomposed into quite normal circumstances that the car knows how to deal with. It just needs to reason about it. So, let's take a look. Everything you're about to see is one shot. It's no hands.

Speaker 4 (52:19):

Routing to your destination. Buckle up. You have arrived.

Jensen Huang (55:10):

We started working on self-driving cars eight years ago, and the reason for that is because we reasoned early on that deep learning and artificial intelligence was going to reinvent the entire computing stack. If we were ever going to understand how to navigate ourselves and how to guide the industry towards this new future, we have to get good at building the entire stack. Well, as I mentioned earlier, AI is a five-layer cake. The lowest layer is land, power and shell. In the case of robotics, the lowest layer is the car. The next layer above it is chips, GPUs, networking chips, CPUs, all that kind of stuff. The next layer above that is the infrastructure. That infrastructure in this particular case, as I mentioned with physical AI, is Omniverse and Cosmos,

Jensen Huang (56:00):

And then above that are the models. And in the case of the models above that I've just shown you, the model here is called Alpamayo, and Alpamayo today is open sourced. This incredible body of work, it took several thousand people. Our AV team is several thousand people, just to put in perspective. Our partner, Ola… I think Ola's here in the audience somewhere. Mercedes agreed to partner with us five years ago to go make all of this possible. We imagine that someday, a billion cars on a road will all be autonomous. You could either have it be a robotaxi that you're orchestrating and renting from somebody, or you could own it and it's driving by itself, or you could decide to drive for yourself, but every single car will have autonomous vehicle capability. Every single car will be AI-powered. And so the model layer in this case is Alpamayo, and the application above that is the Mercedes-Benz.

(57:08)

And so this entire stack is our first NVIDIA first entire stack endeavor. And we've been working on it for this entire time, and I'm just so happy that the first AV car from NVIDIA is going to be on the road in Q1, and then it goes to Europe in Q2. Here in the United States in Q1, then Europe in Q2, and I think it's Asia in Q3 and Q4. And the powerful thing is that we're going to keep on updating it with next versions of Alpamayo and versions after that. There's no question in my mind now that this is going to be one of the largest robotics industries, and I'm so happy that we worked on it. And it taught us an enormous amount about how to help the rest of the world build robotic systems, that deep understanding in knowing how to build it ourselves, building the entire infrastructure ourselves, and knowing what kind of chips a robotic system would need.

(58:03)

In this particular case, dual Orins, the next generation, dual Thors. These processors are designed for robotic systems, and was designed for the highest level of safety capability. This car just got rated. It just went to production. The Mercedes-Benz CLA was just rated by NCAP the world's safest car. It is the only system that I know that has every single line of code, the chip, the system, every line of code safety certified. The entire model system is based on a… Sensors are diverse and redundant, and so is the self-driving car stack. The Alpamayo stack is trained end to end, and has incredible skills. However, nobody knows until you drive it forever that it's going to be perfectly safe. And so the way we guardrail that is with another software stack, an entire AV stack underneath. That entire AV stack is built to be fully traceable. And it's taken us some five years to build that, some six, seven years actually, to build that second stack.

(59:21)

These two software stacks are mirroring each other. And then we have a policy and safety evaluator decide, is this something that I'm very confident and can reason about driving very safely? If so, I'm going to have Alpamayo do it. If it's a circumstance that I'm not very confident in, and the safety policy evaluator decides that we're going to go back to a simpler, safer guardrail system, then it goes back to the classical AV stack. We're the only car in the world with both of these AV stacks running, and all safety systems should have diversity and redundancy. Well, our vision is that someday, every single car, every single truck will be autonomous, and we've been working towards that future. This entire stack is vertically integrated, of course, in the case of Mercedes-Benz. We built the entire stack together. We're going to deploy the car. We're going to operate the stack. We're going to maintain the stack for as long as we shall live.
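The arbitration described here, where an evaluator hands control to the end-to-end model only when it is confident and otherwise falls back to the classical stack, can be sketched roughly as follows. This is a minimal illustration, not NVIDIA's implementation: the `Plan` type, the `select_plan` function, the scalar `confidence` score, and the `0.9` threshold are all hypothetical names and values chosen for the example.

```python
from dataclasses import dataclass


@dataclass
class Plan:
    source: str       # which stack produced the plan
    trajectory: list  # placeholder waypoints, purely illustrative


def select_plan(end_to_end: Plan, classical: Plan,
                confidence: float, threshold: float = 0.9) -> Plan:
    """Use the end-to-end plan only when the evaluator is confident.

    Both stacks always produce a plan (diversity and redundancy);
    the evaluator only decides which one is acted on.
    """
    if confidence >= threshold:
        return end_to_end
    return classical  # simpler, traceable guardrail stack


e2e = Plan("end_to_end", [(0.0, 0.0), (1.0, 0.2)])
fallback = Plan("classical", [(0.0, 0.0), (1.0, 0.0)])

print(select_plan(e2e, fallback, confidence=0.97).source)
print(select_plan(e2e, fallback, confidence=0.40).source)
```

A real evaluator would reason over far richer inputs than a single score; the point of the sketch is only that both plans exist at every step and the fallback is always available.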

(01:00:12)

However, like everything else we do as a company, we build the entire stack, but the entire stack is open for the ecosystem. And the ecosystem working with us to build L4 and robotaxis is expanding, and it's going everywhere. I fully expect this to be… Well, this is already a giant business for us. It's a giant business for us because they use it for training data, processing data, and training their models. They use it for synthetic data generation. In some cases, in some companies, they pretty much just build the computers, the chips that are inside the car. And some companies work with us full stack. Some companies work with us some partial part of that. So it doesn't matter how much you decide to use. My only request is use a little bit of NVIDIA wherever you can, but the entire thing is open.

(01:01:08)

Now, this is going to be the first large-scale mainstream AI, physical AI, market. And this is now, I think we can all agree, fully here. And this inflection point of going from not autonomous vehicles to autonomous vehicles is probably happening right about this time. In the next 10 years, I'm fairly certain a very, very large percentage of the world's cars will be autonomous or highly autonomous. But this basic technique that I just described in using the three computers, using the synthetic data generation, and simulation applies to every form of robotic systems. It could be a robot that is just an articulator, a manipulator. Maybe it's a mobile robot, maybe it's a fully humanoid robot.

(01:01:56)

And so the next journey, the next era for robotic systems is going to be robots, and these robots are going to come in all kinds of different sizes. And I invited some friends. Did they come? Hey, guys. Hurry up. I got a lot of stuff to cover. Come on. Hurry. Did you tell R2-D2 you're going to be here? Did you? And C-3PO? Okay. All right. Come here. Now, one of the things that's really… You have Jetsons. They have little Jetson computers inside them. They're trained inside Omniverse. And how about this? Let's show everybody the simulator that you guys learned how to be robots in. You guys want to look at that? Okay, let's look at that. Run it, please.

(01:02:59)

Isn't it amazing? That's how you learned to be a robot. You did it all inside Omniverse. And the robot simulator is called Isaac, Isaac Sim and Isaac Lab. And anybody who wants to build a robot… Nobody's going to be as cute as you, but now we have all… Look at all these friends that we have building robots. We're building big ones. Like I said, nobody's as cute as you guys are, but we have Nurabot, and we have AGIBOT over there. We have LG over here. They just announced a new robot. Caterpillar, they've got the largest robots ever. That one delivers food to your house. That's connected to Uber Eats. And that's Serve Robotics. I love those guys. Agility, Boston Dynamics, incredible. You got surgical robots. You got manipulator robots from Franka. You got a Universal Robots robot. Incredible number of different robots.

(01:05:07)

And so this is the next chapter. We're going to talk a lot more about robotics in the future, but it's not just about the robots in the end. I know everything's about you guys. It's about getting there. And one of the most important industries in the world that will be revolutionized by physical AI and AI physics is the industry that started all of us. At NVIDIA, it wouldn't be possible if not for the companies that I'm about to talk to. And I'm so happy that all of them, starting with Cadence, is going to accelerate everything Cadence, CUDA-X integrated into all of their simulations and solvers. They've got NVIDIA physical AIs that they're going to use for different physical plants and plant simulations. You got AI physics being integrated into these systems.

(01:05:57)

So whether it's an EDA or SDA, and in the future, robotic systems, we're going to have basically the same technology that made you guys possible now completely revolutionize these design stacks. Synopsys. Without Synopsys… Synopsys and Cadence are completely, completely indispensable in the world of chip design. Synopsys leads in logic design and IP. In the case of Cadence, they lead physical design, the place and route, and emulation and verification. Cadence is incredible at emulation and verification. Both of them are moving into the world of system design and system simulation. And so in the future, we're going to design your chips inside Cadence and inside Synopsys. We're going to design your systems and emulate the whole thing and simulate everything inside these tools. That's your future. We're going to give… Yeah, you're going to be born inside these platforms. Pretty amazing, right? And so we're so happy that we're working with these industries. Just as we've integrated NVIDIA into Palantir and ServiceNow, we're integrating NVIDIA into the most computationally-intensive simulation industries, Synopsys and Cadence.

(01:07:16)

And today, we're announcing that Siemens is also doing the same thing. We're going to integrate CUDA-X, physical AI, agentic AI, NeMo, Nemotron, deeply integrate it into the world of Siemens. And the reason for that is this. First, we designed the chips, and all of it in the future will be accelerated by NVIDIA. You're going to be very happy about that. We're going to have agentic chip designers and system designers working with us, helping us do design, just as we have agentic software engineers helping our software engineers code today. And so we'll have agentic chip designers and system designers. We're going to create you inside this, but then we have to build you. We have to build the plants, the factories that manufacture you.

(01:08:06)

We have to design the manufacturing lines that assemble all of you, and these manufacturing plants are going to be essentially gigantic robots. Incredible. Isn't that right? I know, I know. And so you're going to be designed in a computer. You're going to be made in a computer. You're going to be tested and evaluated in a computer long before you have to spend any time dealing with gravity. I know. Do you know how to deal with gravity? Can you jump? Can you jump? Okay. All right. Don't show off. Okay. So now, the industry that made NVIDIA possible, I'm just so happy that now the technology that we're creating is at a level of sophistication and capability that we can now help them revolutionize their industry.

(01:09:15)

And so what started with them, we now have the opportunity to go back and help them revolutionize theirs. Let's take a look at the stuff that we're going to do with Siemens. Come on.

Speaker 3 (01:09:27):

Breakthroughs in physical AI are letting AI move from screens to our physical world, and just in time, as the world builds factories of every kind for chips, computers, life-saving drugs, and AI. As the global labor shortage worsens, we need automation powered by physical AI and robotics more than ever. This, where AI meets the world's largest physical industries, is the foundation of NVIDIA and Siemens' partnership. For nearly two centuries, Siemens has built the world's industries, and now, it is reinventing it for the age of AI. Siemens is integrating NVIDIA CUDA-X libraries, AI models, and Omniverse into its portfolio of EDA, CAE, and digital twin tools and platforms. Together, we're bringing physical AI to the full industrial life cycle, from design and simulation to production and operations. We stand at the beginning of a new industrial revolution, the age of physical AI built by NVIDIA and Siemens for the next age of industries.

Jensen Huang (01:10:59):

Incredible, right, guys? What do you think? All right. Hang on tight. Just hang on tight. If you look at the world's models, there's no question OpenAI is the leading token generator today. More OpenAI tokens are generated than just about anything else. The second-largest group, the second largest is probably open models. And my guess is that over time, because there are so many companies, so many researchers, so many different types of domains and modalities, that open source models will be by far the largest.

(01:11:38)

Let's talk about somebody really special. You guys want to do that? Let's talk about Vera Rubin. Vera Rubin… Yeah, go ahead. She's an American astronomer. She was the first to observe… She noticed that the tails of the galaxies were moving about as fast as the center of the galaxies. Well, I know it makes no sense. It makes no sense. Newtonian physics would say, just like the solar system, the planets further away from the sun is circling the sun slower than the planets closer to the sun. And therefore, it makes no sense that this happens, unless there's invisible bodies. We call them… She discovered dark body, dark matter, that occupies space, even though we don't see it. And so Vera Rubin is the person that we named our next computer after. Isn't that a good idea? I know.

(01:12:44)

Okay. Vera Rubin is designed to address this fundamental challenge that we have. The amount of computation necessary for AI is skyrocketing. The demand for NVIDIA GPUs is skyrocketing. It's skyrocketing because models are increasing by a factor of 10, an order of magnitude every single year. And not to mention, as I mentioned, o1's introduction was an inflection point for AI. Instead of a one-shot answer, inference is now a thinking process. And in order to teach the AI how to think, reinforcement learning and very significant computation was introduced into post-training. It's no longer supervised fine-tuning, or otherwise known as imitation learning or supervision training. You now have reinforcement learning, essentially the computer trying different iterations itself, learning how to perform a task.

(01:13:44)

The amount of computation for pre-training, for post-training, for test-time scaling has exploded as a result of that. And now, every single inference that we do, instead of just one shot the number of tokens, you can just see the AIs think, which we appreciate. The longer it thinks, oftentimes it produces a better answer. And so test-time scaling causes the number of tokens generated to increase by 5x every single year. Not to mention, meanwhile, the race is on for AI. Everybody's trying to get to the next level. Everybody's trying to get to the next frontier. And every time they get to the next frontier, the last-generation AI tokens, the cost starts to decline about a factor of 10x every year. The 10x decline every year is actually telling you something different. It's saying that the race is so intense. Everybody's trying to get to the next level, and somebody is getting to the next level. And so therefore, all of it is a computing problem. The faster you compute, the sooner you can get to the next level of the next frontier.
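To see how quickly the three yearly rates quoted in the talk compound (models growing 10x, generated tokens growing 5x, last-generation token cost falling 10x), here is a small illustrative calculation. It assumes the rates stay constant and are independent, which the talk does not claim; the function name `growth_table` and its parameters are made up for this sketch.

```python
def growth_table(years, token_growth=5, cost_decline=10, model_growth=10):
    """Compound the per-year factors quoted in the talk, relative to year 0."""
    rows = []
    for year in range(years + 1):
        rows.append({
            "year": year,
            "tokens_generated": token_growth ** year,   # 5x per year
            "model_size": model_growth ** year,         # 10x per year
            "cost_per_token": 1 / cost_decline ** year, # 10x cheaper per year
        })
    return rows


for row in growth_table(3):
    print(row)
```

Under these assumptions, three years of compounding means 125 times as many tokens and models 1,000 times larger than the starting point.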

(01:14:48)

All of these things are simultaneously happening at the same time. And so we decided that we have to advance the state-of-the-art of computation every single year, not one year left behind. And now, we started shipping GB200s a year and a half ago. Right now, we're in full-scale manufacturing of GB300, and if Vera Rubin is going to be in time for this year, it must be in production by now. And so today, I can tell you that Vera Rubin is in full production. You guys want to take a look at Vera Rubin? All right. Come on. Play it, please.

Speaker 3 (01:15:39):

Vera Rubin arrives just in time for the next frontier of AI. This is the story of how we built it. The architecture, a system of six chips, engineered to work as one, born from extreme co-design. It begins with Vera, a custom design CPU, double the performance of the previous generation, and the Rubin GPU. Vera and Rubin are co-designed from the start to bidirectionally and coherently share data faster and with lower latency. Then, 17,000 components come together on a Vera Rubin compute board. High-speed robots place components with micron precision before the Vera CPU and two Rubin GPUs complete the assembly, capable of delivering 100 petaflops of AI, five times that of its predecessor.

(01:16:38)

AI needs data fast. ConnectX-9 delivers 1.6 terabits per second of scale-out bandwidth to each GPU. BlueField-4 DPU offloads storage and security, so compute stays fully focused on AI. The Vera Rubin compute tray, completely redesigned with no cables, hoses, or fans, featuring a BlueField-4 DPU, eight ConnectX-9 NICs, two Vera CPUs, and four Rubin GPUs, the compute building block of the Vera Rubin AI supercomputer.

(01:17:19)

Next, the sixth-generation NVLink switch, moving more data than the global internet, connecting 18 compute nodes, scaling up to 72 Rubin GPUs operating as one. Then, Spectrum-X ethernet photonics, the world's first ethernet switch with 512 lanes and 200 gigabit capable co-packaged optics, scale out thousands of racks into an AI factory. 15,000 engineer years since design began, the first Vera Rubin NVL72 rack comes online. Six breakthrough chips, 18 compute trays, nine NVLink switch trays, 220 trillion transistors, weighing nearly two tons. One giant leap to the next frontier of AI. Rubin is here.

Jensen Huang (01:18:27):

What do you guys think? This is a Rubin pod, 1,152 GPUs in 16 racks. Each one of the racks, as you know, has 72 Rubins. Each one of the Rubins is two actual GPU dies connected together. I'm going to show it to you, but there are several things that… Well, I'll tell you later. I can't tell you everything right away. Well, we designed six different chips. First of all, we have a rule inside our company, and it's a good rule. No new generation should have more than one or two chips change. But the problem is this. As you could see, we were describing the total number of transistors in each one of the chips that were being described, and we know that Moore's law has largely slowed. And so the number of transistors we can get year after year after year can't possibly keep up with the 10 times larger models. It can't possibly keep up with five times per year more tokens generated. It can't possibly keep up with the fact that cost decline of the tokens is going to be so aggressive. It is impossible to keep up with those kind of rates for the industry to continue to advance, unless we deployed aggressive, extreme co-design, basically innovating across all of the chips, across the entire stack, all at the same time, which is the reason why we decided that this generation, we had no choice but to design every chip over again.

(01:20:16)

Now, every single chip that we were describing just now can be a press conference all in itself, and there's an entire company who's probably dedicated to doing that back in the old days. Each one of them are completely revolutionary and the best of its kind. The Vera CPU, I'm so proud of it. In a power-constrained world, Grace CPU is two times the performance. In a power-constrained world, it's twice the performance per watt of the world's most advanced CPUs. Its data rate is insane. It was designed to process supercomputers. And Vera was… Grace was an incredible CPU. Now, Vera increases the single-threaded performance, increases the capacity of the memory, increases everything just dramatically. It's a giant chip.

(01:21:09)

This is the Vera CPU. This is one CPU, and this is connected to the Rubin GPU. Look at that thing. It's a giant chip. Now, the thing that's really special… I'll go through these. It's going to take three hands, I think, four hands to do this. Okay, so this is the Vera CPU. It's got 88 CPU cores, and the CPU cores are designed to be multithreaded, but the multithreaded nature of Vera was designed so that each one of the 176 threads could get its full performance. So it's essentially as if there's 176 cores, but only 88 physical cores. So these cores were designed using a technology called spatial multithreading, but the I/O performance is incredible.

(01:22:01)

This is the Rubin GPU. It's 5x Blackwell in floating-point performance, but the important thing is… Go to the bottom line. The bottom line, it's only 1.6 times the number of transistors of Blackwell. That tells you something about the levels of semiconductor physics today. If we don't do co-design, if we don't do extreme co-design at the level of basically every single chip across the entire system, how is it possible we deliver performance levels that is, at best, 1.6 times each year? Because that's the total number of transistors you have.
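The gap between the two figures quoted here (5x the performance from 1.6x the transistors) can be put as a single ratio. This is only back-of-the-envelope arithmetic on the numbers from the talk; the function name is hypothetical.

```python
def per_transistor_gain(performance_gain, transistor_gain):
    """How much more performance each transistor must deliver, on average."""
    return performance_gain / transistor_gain


gain = per_transistor_gain(performance_gain=5.0, transistor_gain=1.6)
print(f"{gain:.3f}x more performance per transistor")
```

Roughly a threefold gain per transistor has to come from somewhere other than the process node, which is the argument being made for co-design across the whole system.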

(01:22:38)

And even if you were to have a little bit more performance per transistor, say 25%, it's impossible to get 100% yield out of the number of transistors you get. And so 1.6x kind of puts a ceiling on how far performance can go each year, unless you do something extreme, and we call it extreme co-design. Well, one of the things that we did, and was a great invention, it's called NVFP4 Tensor Core. The transformer engine inside our chip is not just a four-bit floating point number somehow that we put into the data path. It is an entire processor, a processing unit that understands how to dynamically, adaptively adjust its precision and structure to deal with different levels of the transformer, so that you can achieve higher throughput wherever it's possible to lose precision and to go back to the highest possible precision wherever you need to.

(01:23:34)

That ability to dynamically do that… You can't do this in software, because obviously it's just running too fast, and so you have to be able to do it adaptively inside the processor. That's what an NVFP4 is. When somebody says FP4 or FP8, it almost means nothing to us, and the reason for that is because it's the Tensor Core structure and all of the algorithms that makes it work. NVFP4, we've published papers on

Jensen Huang (01:24:00):

… this already. The level of throughput and precision it's able to retain is completely incredible. This is groundbreaking work. I would not be surprised if the industry would like us to make this format and this structure an industry standard in the future. This is completely revolutionary. This is how we were able to deliver such a gigantic step-up in performance, even though we only have 1.6 times the number of transistors.

(01:24:29)

And now, once you have a great processing node, and this is the processor node, and inside… So, this is for example… Here, let me do this. This is, wow, super heavy. You have to be a CEO in really good shape to do this job. Okay. All right. So, this thing is, I'm going to guess this is probably, I don't know, a couple of hundred pounds. I thought that was funny. Come on, it could have been. Everybody's gone, "No, I don't think so."

(01:25:19)

All right. So, look at this. This is the last one. We revolutionized the entire MGX chassis. This node, 43 cables, zero cables, six tubes, just two of them here. It takes two hours to assemble this. If you're lucky, it takes two hours. And of course, you're probably going to assemble it wrong, you're going to have to retest it, test it, reassemble it. So, the assembly process is incredibly complicated. And it was understandable as one of our first supercomputers that's deconstructed in this way. This from two hours to five minutes. 80% liquid cooled. 100% liquid cooled. Yeah, really, really a breakthrough.

(01:26:19)

Okay. So, this is the new compute chassis. And what connects all of these to the top-of-rack switches, the east-west traffic is called the Spectrum-X NIC. This is the world's best NIC, unquestionably NVIDIA's Mellanox, the acquisition Mellanox that joined us a long time ago now. Their networking technology for high-performance computing is the world's best, bar none. The algorithms, the chip design, all of the interconnects, all the software stacks that run on top of it, their RDMA, absolutely, absolutely, bar none, the world's best. And now, it has the ability to do programmable RDMA and data path accelerator, so that our partners, like AI labs, could create their own algorithms for how they want to move data around the system. But this is completely world-class, ConnectX.

(01:27:08)

ConnectX-9 and the Vera CPU were co-designed and we never revealed it, never released it until CX9 came along because we co-designed it for a new type of processor. CX8 and Spectrum-X revolutionized how ethernet was done for artificial intelligence. Ethernet traffic for AI is much, much more intense, requires much lower latency. The instantaneous surge of traffic is unlike anything ethernet sees. And so, we created Spectrum-X, which is AI ethernet. Two years ago, we announced Spectrum-X. NVIDIA today is the largest networking company the world has ever seen. So, it's been so successful and used in so many different installations, it is just sweeping the AI landscape.

(01:28:02)

The performance is incredible, especially when you have a 200-megawatt data center or if you have a gigawatt data center, these are billions of dollars. Let's say a gigawatt data center is $50 billion. If the networking performance allows you to deliver an extra 10%, in the case of Spectrum-X, delivering 25% higher throughput is not uncommon. If we were to just deliver 10%, that's worth $5 billion. The networking is completely free, which is the reason why, well, everybody uses Spectrum-X. It's just an incredible thing.
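The arithmetic behind this claim is simple enough to write down. The sketch below uses only the figures from the talk (a $50 billion gigawatt data center, 10% and 25% throughput gains) and treats extra throughput as directly proportional to extra value, which is the talk's simplification. The function name is hypothetical.

```python
def networking_value_billion(datacenter_cost_billion, gain_percent):
    """Value of extra throughput, assuming value scales with throughput."""
    return datacenter_cost_billion * gain_percent / 100


for gain in (10, 25):
    value = networking_value_billion(50, gain)
    print(f"{gain}% more throughput on a $50B data center ~ ${value}B")
```

On these numbers, even the conservative 10% case is worth $5 billion, which is the basis for the remark that the networking effectively pays for itself.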

(01:28:41)

And now, we're going to invent a new type of data processing. And so, Spectrum-X is for east-west traffic. We now have a new processor called BlueField-4. BlueField-4 allows us to take a very large data center, isolate different parts of it so that different users could use different parts of it, make sure that everything could be virtualized if they decide to be virtualized. So, you offload a lot of the virtualization software, the security software, the networking software for your north-south traffic. And so, BlueField-4 comes standard with every single one of these compute nodes. BlueField-4 has a second application I'm going to talk about in just a second. This is a revolutionary processor and I'm so excited about it.

(01:29:27)

This is the NVLink 6 switch and it's right here. This switch here, there are four of them inside the NVLink switch here. Each one of these switch chips has the fastest SerDes in history. The world is barely getting to 200 gigabits. This is 400 gigabits per second switch. The reason why this is so important is so that we could have every single GPU talk to every other GPU at exactly the same time. This switch on the back plane of one of these racks enables us to move the equivalent of twice the amount of the global internet data, twice all of the world's internet data at twice the speed. You take the cross-sectional bandwidth of the entire planet's internet. It's about 100 and terabytes per second. This is 240 terabytes per second. So, it puts it in perspective. This is so that every single GPU can work with every single other GPU at exactly the same time.

(01:30:37)

Then on top of that, on top of that… Okay, so this is one rack. This is one rack. Each one of the racks, as you can see, the number of transistors in this one rack is 1.7 times… Yeah, could you do this for me? So, it's usually about two tons, but today it's two and a half tons because when they shipped it, they forgot to drain the water out of it. So, we shipped a lot of water from California. Can you hear it squealing? When you're rotating two and a half tons, you're going to squeal a little. Oh, you can do it. Wow. Okay. We won't make you do that twice.

(01:31:35)

All right. So, behind this are the NVLink spines. Basically, two miles of copper cables. Copper is the best conductor we know. And these are all shielded copper cables, structured copper cables. The most the world's ever used in computing systems ever. And our SerDes drive the copper cables from the top of the rack all the way to the bottom of the rack at 400 gigabits per second. It's incredible. And so, this has two miles of total copper cables, 5,000 copper cables and this makes the NVLink spine possible. This is the revolution that really started the MGX system.

(01:32:18)

Now, we decided that we would create an industry standard system, so that the entire ecosystem, all of our supply chain could standardize on these components. There are some 80,000 different components that make up these MGX systems and it's a total waste if we were to change it every single year. Every single major computer company from Foxconn to Quanta, to Wistron, the list goes on and on and on, to HP and Dell and Lenovo, everybody knows how to build these systems. And so, the fact that we could squeeze Vera Rubin into this, even though the performance is so much higher and very importantly, the power is twice as high. The power of Vera Rubin is twice as high as Grace Blackwell.

(01:33:10)

And yet, and this is the miracle, the air that goes into it, the air flow is about the same. And very importantly, the water that goes into it is the same temperature, 45 degrees C. With 45 degrees C, no water chillers are necessary for data centers. We're basically cooling this supercomputer with hot water. It is so incredibly efficient. And so, this is the new rack. 1.7 times more transistors, but five times more peak inference performance, three and a half times more peak training performance. They're connected on top using Spectrum-X. Oh, thank you. This is the world's first manufacturing chip using TSMC's new process that we co-innovated called COUPE. It's an integrated silicon photonics process technology. And this allows us to take silicon photonics right to the chip. And this is 512 ports at 200 gigabits per second. And this is the new ethernet AI switch, the Spectrum-X ethernet switch. And look at this giant chip. But what's really amazing, it's got silicon photonics directly connected to it. And lasers come in through here, lasers come in through here. The optics are here and they connect out to the rest of the data center. This I'll show you in a second, but this is on top of the rack and this is the new Spectrum-X silicon photonic switch. And we have something new I want to tell you about.

(01:35:16)

So, just as I mentioned, a couple of years ago, we introduced Spectrum-X, so that we could reinvent the way that networking is done. Ethernet is really easy to manage and everybody has an ethernet stack and every data center in the world knows how to deal with ethernet. And the only thing that we were using at the time was called InfiniBand, which is used for supercomputers. InfiniBand is very low latency, but of course the software stack, the entire manageability of InfiniBand is very alien to the people who use ethernet. So, we decided to enter the ethernet switch market for the very first time. Spectrum-X, that just took off and it made us the largest networking company in the world, as I mentioned. This next generation of Spectrum-X is going to carry on that tradition.

(01:36:05)

But just as I said earlier, AI has reinvented the whole computing stack, every layer of the computing stack, it stands to reason that when AI starts to get deployed in the world's enterprises, it's going to also reinvent the way storage is done. Well, AI doesn't use SQL, AI uses semantic information. And when AI is being used, it creates this temporary knowledge, temporary memory called KV cache, key value combinations, but it's a KV cache, basically the cache of the AI, the working memory of the AI. And the working memory of the AI is stored in the HBM memory. Every single token, for every single token, the GPU reads in the model, the entire model, it reads in the entire working memory, and it produces one token, and it stores that one token back into the KV cache. And then, the next time it does that, it reads in the entire memory, reads it, and it streams it through our GPU, and then generates another token.
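The token-by-token loop described here can be sketched as a toy in Python. It only models the bookkeeping: every step reads the whole cache, produces one token, and appends one entry. There is no real model involved; `next_token` is a hypothetical stand-in for the forward pass, and the string keys and values are placeholders for the tensors a real system would store.

```python
def decode(prompt_tokens, steps, next_token):
    """Generate `steps` tokens, growing the KV cache by one entry per token.

    Returns (generated tokens, final cache length, total cache entries read).
    """
    # Prefill: one key/value entry per prompt token.
    kv_cache = [(f"k{t}", f"v{t}") for t in prompt_tokens]
    entries_read = 0
    generated = []
    for _ in range(steps):
        entries_read += len(kv_cache)   # each step reads the entire cache
        token = next_token(kv_cache)    # stand-in for the model forward pass
        kv_cache.append((f"k{token}", f"v{token}"))  # store the new entry
        generated.append(token)
    return generated, len(kv_cache), entries_read


# Hypothetical "model": the next token is just the current cache length.
tokens, cache_len, reads = decode([0, 1, 2], steps=4,
                                  next_token=lambda cache: len(cache))
print(tokens, cache_len, reads)
```

Because each step re-reads a cache that is one entry longer than before, total reads grow roughly with the square of the conversation length, which is why long contexts put so much pressure on memory capacity and bandwidth.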

(01:37:14)

Well, it does this repeatedly token after token after token. And obviously, if you have a long conversation with that AI, over time, that memory, that context memory is going to grow tremendously. Not to mention the models are growing, the number of turns that we're using the AIs are increasing. We would like to have this AI stay with us our entire life and remember every single conversation we've ever had with it, right? Every single lick of research that I've asked it for. Of course, the number of people that will be sharing the supercomputer is going to continue to grow. And so, this context memory, which started out fitting inside an HBM, is no longer large enough.

(01:37:49)

Last year, we created Grace Blackwell's very fast memory, we called fast-context memory, and that's the reason why we connected Grace directly to Hopper. That's why we connected Grace directly to Blackwell, so that we can expand the context memory, but even that is not enough. And so, the next solution, of course, is to go off onto the network, the north-south network, off to the storage of the company. But if you have a whole lot of AIs running at the same time, that network is no longer going to be fast enough. So, the answer is very clearly to do it different.

(01:38:28)

And so, we created BlueField-4, so that we could essentially have a very fast KV cache context memory store right in the rack. And so, I'll show you in just one second, but there's a whole new category of storage systems. And the industry is so excited because this is a pain point for just about everybody who does a lot of token generation today, the AI labs, the cloud service providers. They're really suffering from the amount of network traffic that's being caused by KV cache moving around. And so, the idea that we would create a new platform, a new processor to run the entire Dynamo KV cache context memory management system and to put it very close to the rest of the rack is completely revolutionary.

(01:39:18)

So, this is it. It sits right here. So, this is all the compute nodes. Each one of these is NVLink 72. So, this is Vera Rubin NVLink72, 144 Rubin GPUs. This is the context memory that's stored here. Behind each one of these are four BlueFields, behind each BlueField is 150 terabytes of context memory. And for each GPU, once you allocate it across, each GPU will get an additional 16 terabytes. Now, inside this node, each GPU essentially has one terabyte. And now, with this backing store here, directly on the same east-west traffic at exactly the same data rate, 200 gigabits per second, across literally the entire fabric of this compute node, you're going to get an additional 16 terabytes of memory, and this is the management plane. These are the Spectrum-X switches that connects all of them together. And over here, these switches at the end connects them to the rest of the data center. And so, this is the Vera Rubin.

(01:40:50)

Now, there's several things that's really incredible about it. So, the first thing that I mentioned is that this entire system is twice the energy efficiency, essentially twice the temperature performance in the sense that even though the power is twice as high, the amount of energy used is twice as high, the amount of computation is many times higher than that, but the liquid that goes into it is still 45 degrees C. That enables us to save about 6% of the world's data center power. So, that's a very big deal.

(01:41:26)

The second very big deal is that this entire system is now confidential computing safe, meaning everything is encoded in transit, at rest and during compute and every single bus is now encrypted. Every PCI Express, every NVLink, every NVLink between CPU and GPU, between GPU to GPU, everything is now encrypted. And so, it's confidential computing safe. This allows companies to feel safe that their models are being deployed by somebody else, but it will never be seen by anybody else. And so, this particular system is not only incredibly energy efficient, and there's one other thing that's incredible. Because of the nature of the workload of AI, it spikes instantaneously with this computation layer called all-reduce.

(01:42:23)

The amount of current, the amount of energy that is used simultaneously is really off the charts. Oftentimes, it'll spike up 25%. We now have power smoothing across the entire system, so that you don't have to over-provision by 25%, or if you over-provision by 25%, you don't have to leave 25% of the energy squandered or unused. And so, now you could fill up the entire power budget and you don't have to provision beyond that.
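
The over-provisioning argument can be made concrete with a small Python sketch. It uses only the 25% spike figure from the talk. The assumptions that spikes must fit entirely under the power budget, and that smoothing flattens them completely, are idealizations added here for illustration. The function names and the one-gigawatt example budget are hypothetical.

```python
def steady_load_without_smoothing(budget_w: float, spike_fraction: float) -> float:
    """Largest steady-state load whose spikes of (1 + spike_fraction) still fit the budget."""
    return budget_w / (1.0 + spike_fraction)


def reserved_headroom(budget_w: float, spike_fraction: float) -> float:
    """Power held in reserve for spikes, which sits unused between them."""
    return budget_w - steady_load_without_smoothing(budget_w, spike_fraction)


BUDGET_W = 1e9   # hypothetical one-gigawatt budget
SPIKE = 0.25     # "it'll spike up 25%"

steady = steady_load_without_smoothing(BUDGET_W, SPIKE)
headroom = reserved_headroom(BUDGET_W, SPIKE)

if __name__ == "__main__":
    print(f"Steady load without smoothing: {steady / 1e6:.0f} MW")
    print(f"Reserved headroom: {headroom / 1e6:.0f} MW "
          f"({headroom / steady:.0%} of steady load, {headroom / BUDGET_W:.0%} of budget)")
    # Idealized smoothing: no spikes, so the steady load can equal the budget
    print(f"Steady load with ideal smoothing: {BUDGET_W / 1e6:.0f} MW")
```

In this idealized picture the reserved headroom equals 25% of the steady load, or 20% of the total budget, and smoothing lets the steady load grow to fill it.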

(01:43:01)

And then, the last thing, of course, is performance. So, let's take a look at the performance of this. These are only charts that people who build AI supercomputers love. It took every single one of these chips, complete redesign of every single one of the systems and rewriting the entire stack for us to make this possible. Basically, this is training the AI model, this first column. The faster you train AI models, the faster you can get the next frontier out to the world. This is your time to market. This is technology leadership. This is your pricing power. And so, in the case of the green, this is essentially a 10-trillion-parameter model. We scaled it up from DeepSeek, that's why we call it DeepSeek++, training a 10-trillion-parameter model on 100 trillion tokens. And this is our simulation projection of what it would take for us to build the next frontier model.

(01:44:02)

The next frontier model, Elon's already mentioned that the next version of Grok, Grok 5 I think, is seven trillion parameters, so this is 10. And the green is Blackwell. And here, in the case of Rubin, notice the throughput is so much higher, and therefore, it only takes one fourth as many of these systems in order to train the model in the time that we gave it here, which is one month. And so, time is the same for everybody. Now, how fast you can train that model and how large a model you can train is how you're going to get to the frontier first.

(01:44:42)

The second part is your factory throughput. Blackwell is green again, and factory throughput is important because your factory is, in the case of a gigawatt, it's $50 billion. A $50 billion data center can only consume one gigawatt of power. And so, if your performance, your throughput per watt is very good versus quite poor, that directly translates to your revenues. Your revenues of your data center is directly related to the second column. And in the case of Blackwell, it was about 10 times over Hopper. In the case of Rubin, it's going to be about 10 times higher again. Now, the cost of the tokens, how cost-effectively it is to generate the token, this is Rubin about 1/10th, just as in the case of… Yep.
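
The link between throughput per watt and factory output at a fixed power budget can be sketched in a few lines of Python. The one-gigawatt budget and the two "about 10 times" steps come from the talk. Compounding them into a 100x figure relative to Hopper is a derivation made here, and the relative units, names, and the assumption that output scales linearly with efficiency are illustrative only.

```python
POWER_BUDGET_W = 1e9  # "can only consume one gigawatt of power"

# Relative throughput per watt, with Hopper as the unit.
# Blackwell: "about 10 times over Hopper"; Rubin: "about 10 times higher again".
RELATIVE_TOKENS_PER_JOULE = {"Hopper": 1.0, "Blackwell": 10.0, "Rubin": 100.0}


def tokens_per_second(power_w: float, tokens_per_joule: float) -> float:
    """Token rate of a power-limited factory: watts times tokens per joule."""
    return power_w * tokens_per_joule


def relative_factory_output(power_w: float = POWER_BUDGET_W) -> dict:
    """Token output of each generation at the same power budget, relative to Hopper."""
    baseline = tokens_per_second(power_w, RELATIVE_TOKENS_PER_JOULE["Hopper"])
    return {
        name: tokens_per_second(power_w, efficiency) / baseline
        for name, efficiency in RELATIVE_TOKENS_PER_JOULE.items()
    }


if __name__ == "__main__":
    for name, ratio in relative_factory_output().items():
        print(f"{name}: {ratio:.0f}x Hopper's token output at the same power budget")
```

Because the power budget is fixed, any gain in tokens per joule shows up one-for-one as more tokens, which is the sense in which revenue is tied to the second column.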

(01:45:48)

So, this is how we're going to get everybody to the next frontier to push AI to the next level. And of course, to build these data centers energy efficiently and cost efficiently. So, this is it. This is NVIDIA today. We mentioned that we build chips, but as you know, NVIDIA builds entire systems now, and AI is a full stack. We're reinventing AI across everything from chips to infrastructure, to models, to applications. And our job is to create the entire stack, so that all of you could create incredible applications for the rest of the world.

(01:46:33)

Thank you all for coming. Have a great CES.

(01:46:36)

Now, before I let you guys go, there were a whole bunch of slides we had to leave on the cutting floor, and so we have some outtakes here. I think it'll be fun for you. Have a great CES, guys.

Video (01:46:55):

And cut.

(01:46:59)

NVIDIA live at CES, take four. Marker.

(01:47:03)

Boom mic. Action.

(01:47:08)

Sorry, guys.

(01:47:10)

Platform shift, huh? That should do it.

(01:47:18)

And let's roll camera. Ah.

(01:47:22)

Shade of green. A bright, happy green.

(01:47:27)

World's most powerful AI supercomputer you can plug into the wall next to my toaster.

(01:47:37)

Hey, guys, I'm stuck again. I'm so sorry.

(01:47:39)

This slide is never going to work. Let's just cut it.

(01:47:42)

Hello? Can you hear me?

(01:47:47)

So, like I was saying, the router, because not every problem needs the biggest, smartest model. Just the right one.

(01:47:55)

No, no. Don't lose any of them. This new six-chip Rubin platform makes one amazing AI supercomputer.

(01:48:07)

There you go, little guy. Oh, no. No, not the scaling laws.

(01:48:12)

There is a squirrel on the car. Be ready to make the squirrel go away. Ask the squirrel gently to move away.

(01:48:19)

Did you know the best models today are all a mixture of experts?

(01:48:22)

Hey.

(01:48:22)

Where'd everybody go?