Gary (00:00):

… Millions of people rely on every single day. Today, AMD is a central force in the global AI transformation. Its CPUs, GPUs and adaptive computing solutions help unlock new capabilities across cloud, enterprise, edge, and PC. And while the industry has spent years discussing what AI could become, Lisa has been focused on building the computing foundation that makes AI real and accessible at scale. But what truly distinguishes Lisa is her leadership. She's analytical and deeply technical, yet always grounded in purpose. She brings a rare combination of scientific brilliance, strategic clarity, and human-centered thinking. She believes in partnering deeply to engineer solutions that matter, solutions that advance society, strengthen industries, and expand opportunity.

(00:58)

That's the kind of leadership CES is designed to elevate, and that's the kind of leadership we all need as we navigate a world of accelerated innovation. Tonight, Lisa will share AMD's vision for how high performance computing and advanced AI architectures will transform every part of our digital and physical world, from research, healthcare, and space exploration to education and productivity. She'll speak to the extraordinary pace of AI, the breakthroughs happening now, and the opportunities and responsibilities that come with building the future.

Speaker 2 (01:54):

Hello, and welcome to this unique moment in human history. A moment where what's possible might soon forget what's impossible, where any game you play now has the power to play by your rules. Where AI not only helps model the possibilities of what a city can be, but makes sure our kids never forget what our cities used to be. A moment where new hope meets treatment plans from AI-mapped genomes, where "no driver" officially has better reflexes than any driver who's ever lived, and where "no signal" can no longer stop you from sharing…

Greg Brockman (02:52):

Show me, show me, show me.

Speaker 2 (02:54):

… This. A moment where AI is helping design a renewable energy source as powerful as the sun itself, and helping turn a trip across the Atlantic into just another puddle jump. But as fast as everything's changing, there's one thing that won't. We're working tirelessly to create a world where the most advanced AI capabilities end up in the right hands. Yours.

Speaker 1 (03:51):

So just keep walking, even though…

Gary (03:58):

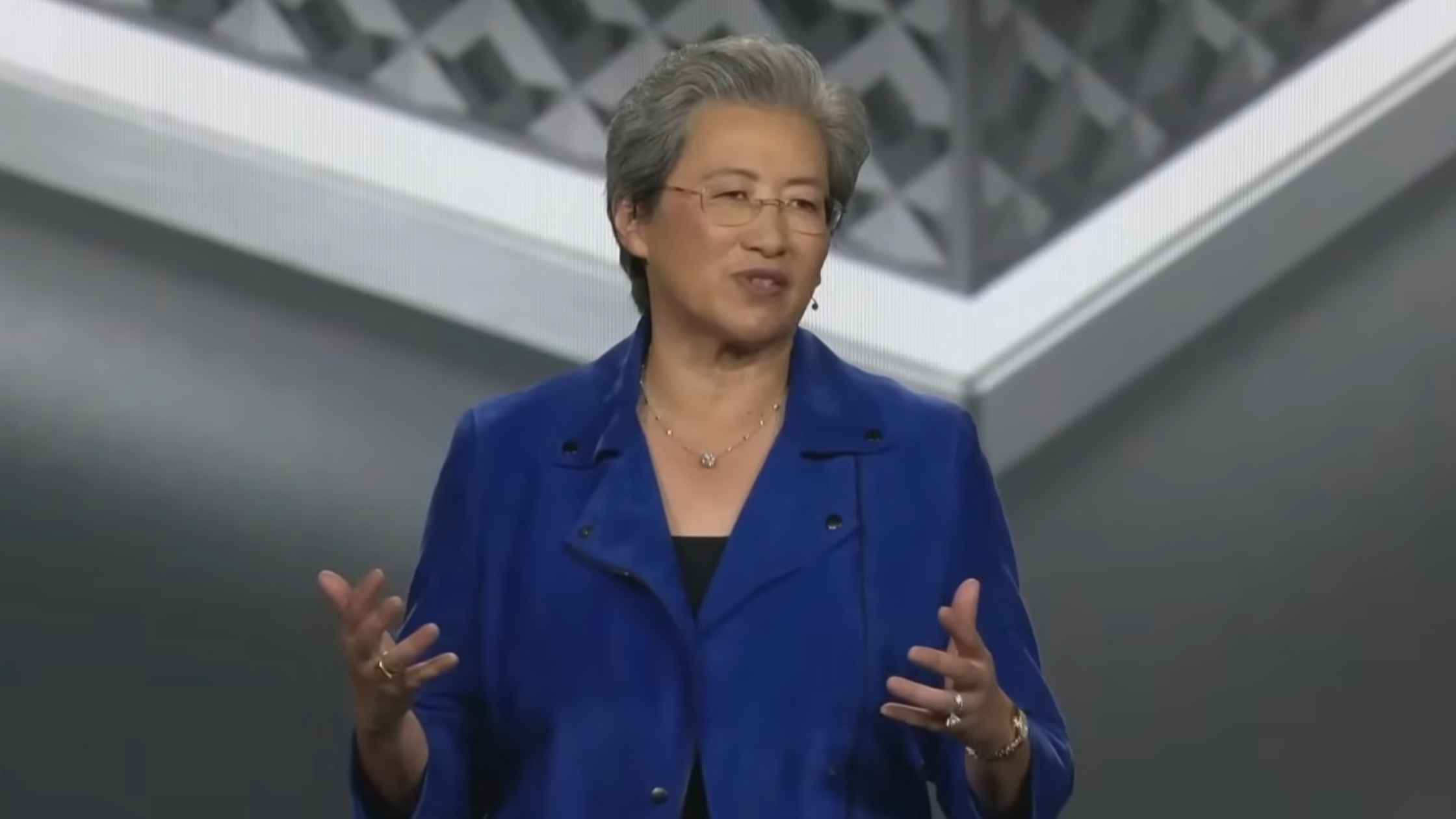

So ladies and gentlemen, it is my privilege to welcome to the stage a globally respected technologist, an industry defining CEO, and a leader whose work continues to shape the very trajectory of modern computing. Ladies and gentlemen, please join me in welcoming to the stage chair and CEO of AMD, Dr. Lisa Su.

Dr. Lisa Su (04:23):

Thank you. Thank you. All right. What an audience. How are you guys doing tonight? That sounds wonderful. First of all, thank you, Gary, and welcome to everyone here in Las Vegas and joining us online. It's great to be here with all of you to kick off CES 2026. And I have to say, every year, I love coming to CES to see all the latest and greatest tech, and catch up with so many friends and partners. But this year, I'm especially honored to be here with all of you to open CES. Now, we have a completely packed show for you tonight, and it will come as no surprise that tonight is all about AI. Although the rate and pace of AI innovation has been incredible over the last few years, my theme for tonight is You Ain't Seen Nothing Yet.

(05:29)

We are just starting to realize the power of AI. And tonight, I'm going to show you a number of examples of where we're headed, and I'll be joined by some of the leading experts in the world, from industry giants to breakthrough startups. And together, we are working to bring AI everywhere and for everyone. So let's get started. At AMD, our mission is to push the boundaries of high performance and AI computing to help solve the world's most important challenges. Today, I'm incredibly proud to say that AMD technology touches the lives of billions of people every day. From the largest cloud data centers to the world's fastest supercomputers to 5G networks, transportation and gaming, every one of these areas is being transformed by AI. AI is the most important technology of the last 50 years, and I can say it's absolutely our number one priority at AMD. It's already touching every major industry, whether you're talking about healthcare or science or manufacturing or commerce, and we're just scratching the surface. AI is going to be everywhere over the next few years, and most importantly, AI is for everyone. It makes us smarter. It makes us more capable. It enables each one of us to be a more productive version of ourselves. And at AMD, we're building the compute foundation to make that future real, for every company and for every person. Now, since the launch of ChatGPT a few years ago, I'm sure we all remember the first time we tried it. We've gone from a million people using AI to now more than a billion active users. This is just an incredible ramp. It took the internet decades to reach that same milestone. Now, what we are projecting is even more amazing. We see the adoption of AI growing to over five billion active users as AI truly becomes indispensable to every part of our lives, just like the cell phone and the internet of today.

(07:44)

Now, the foundation of AI is compute. With all of that user growth, we have seen a huge surge in demand for global compute infrastructure, growing from about one zettaflop in 2022 to more than a hundred zettaflops in 2025. Now, that sounds big. That's actually a hundred times in just a few years. But what you're going to hear tonight from everyone is that we don't have nearly enough compute for everything that we can possibly do. We have incredible innovation happening. Models are becoming much more capable. They're thinking and reasoning. They're making better decisions, and that goes even further when we extend that to agents overall. So to enable AI everywhere, we need to increase the world's compute capacity another hundred times, to more than 10 yottaflops, over the next five years. Now, let me take a survey. How many of you know what a yottaflop is?

(08:50)

Raise your hand, please. A yottaflop is a one followed by 24 zeros. So 10 yottaflops is 10,000 times more compute than we had in 2022. There's just never, ever been anything like this in the history of computing, and that's really because there's never been a technology like AI. Now, to enable this, you need AI in every compute platform. So what we're going to talk about tonight is the whole gamut. We're going to talk about the cloud, where it runs continuously, delivering intelligence globally. We're going to talk about PCs, where it helps us work smarter and personalize every experience that we have. And we're going to talk about the edge, where it powers machines that can make real-time decisions in the real world. AMD is the only company that has the full range of compute engines to make this vision a reality. You really need to have the right compute for each workload, and that means GPUs, that means CPUs, that means NPUs, that means custom accelerators. We have them all, and each of them can be tuned for the application to give you the best performance as well as the most cost-effective solution. So tonight, we're going to go on a journey, and you're going to go with me through several chapters as we showcase the latest AI innovations across cloud, PCs, healthcare, and much more. So let's go ahead and start with the first chapter, which is the cloud. The cloud is really where the largest models are trained and where intelligence is delivered to billions of users in real time. For developers, the cloud gives them instant access to massive compute, the latest tools, and the ability to deploy and scale as use cases take off. The cloud is also where most of us experience AI today. So whether you're using ChatGPT or Gemini, or Grok, or you're coding with copilots, all of these powerful models are running in the cloud. Now, today, AMD is powering AI at every level of the cloud. Every major cloud provider runs on AMD EPYC CPUs, and eight of the top 10 AI companies use Instinct accelerators to power their most advanced models, and the demand for more compute is just continuing to go up. Let me just show you a few graphs. Over the past decade, the compute needed to train the leading AI models has increased more than four times every year, and that trend is just continuing.
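The compute figures quoted above are plain SI-prefix arithmetic. The short Python sketch below reproduces them; only the quoted values (about 1 zettaflop in 2022, more than 100 zettaflops in 2025, a 10-yottaflop target) come from the talk, and the variable and function names are illustrative, not from any AMD tool.

```python
# SI prefixes used in the talk
ZETTA = 10**21  # 1 followed by 21 zeros
YOTTA = 10**24  # 1 followed by 24 zeros

compute_2022 = 1 * ZETTA      # "about one zettaflop in 2022"
compute_2025 = 100 * ZETTA    # "more than a hundred zettaflops in 2025"
compute_target = 10 * YOTTA   # "more than 10 yottaflops" target


def growth_factor(start: int, end: int) -> int:
    """How many times larger `end` is than `start`.

    Integer division is exact here because every input is a power of ten."""
    return end // start


if __name__ == "__main__":
    print(f"2022 -> 2025:   {growth_factor(compute_2022, compute_2025):,}x")
    print(f"2025 -> target: {growth_factor(compute_2025, compute_target):,}x")
    print(f"2022 -> target: {growth_factor(compute_2022, compute_target):,}x")
```

Running it prints 100x, 100x and 10,000x: one hundredfold step already taken, a second hundredfold step still to go.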

(11:35)

That's how we're getting today's models that are dramatically smarter and more useful. At the same time, as more people are using AI, we've seen an explosion of inference over the last two years, with the number of tokens growing a hundred times and really hitting an inflection point. You can just see how much inference is really taking off. And to keep up with this compute demand, you really need the entire ecosystem to come together. So what we like to say is that the real challenge is how to build AI infrastructure at yotta scale, and that requires more than just raw performance. It starts with leadership compute: CPUs, GPUs, and networking coming together. It takes an open, modular rack design that can evolve over product generations. It requires high-speed networking to connect thousands of accelerators into a single unified system, and it has to be really easy to deploy, so we want full turnkey solutions.
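The growth claims in this part of the talk are compound rates. As a rough sketch, assuming smooth, constant year-over-year growth (real deployments won't follow a clean curve), the helpers below convert between a per-year multiplier and a multi-year total. The function names are hypothetical.

```python
import math


def annual_rate(total_factor: float, years: float) -> float:
    """Constant yearly multiplier that compounds to `total_factor` over `years`."""
    return math.pow(total_factor, 1 / years)


def compounded(rate_per_year: float, years: int) -> float:
    """Total growth after applying `rate_per_year` every year for `years` years."""
    return rate_per_year ** years


if __name__ == "__main__":
    # ~4x per year in training compute, sustained for a decade
    print(f"{compounded(4, 10):,}x over ten years")
    # ~100x more inference tokens over about two years
    print(f"{annual_rate(100, 2):.1f}x per year")
    # another 100x in global compute (100 ZF -> 10 YF) over five years
    print(f"{annual_rate(100, 5):.2f}x per year")
```

Under these assumptions, 4x a year for a decade compounds to roughly a millionfold (1,048,576x), a hundredfold rise in tokens over two years is about 10x a year, and the 10-yottaflop target works out to roughly 2.5x a year.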

(12:41)

That's exactly why we built Helios, our next-generation rack-scale platform for the yotta-scale AI era. Helios requires innovation at every single level: hardware, software, and systems. It starts with our engineering teams, who designed our next-generation Instinct MI455 accelerators to deliver the largest generational performance increase we've ever achieved.

(13:10)

MI455 GPUs are built using leading-edge two-nanometer and three-nanometer process technologies and advanced 3D chiplet packaging, with ultra-fast HBM4 high-bandwidth memory. This is integrated into a compute tray with our EPYC CPUs and Pensando networking chips to create a tightly integrated platform. Each tray is then connected with the high-speed Ultra Accelerator Link (UALink) protocol tunneled over Ethernet, which enables the 72 GPUs in the rack to function as a single compute unit. And then from there, we connect thousands of Helios racks to build powerful AI clusters using industry-standard Ultra Ethernet NICs and Pensando programmable DPUs that can accelerate AI performance even more by offloading some of the tasks from the GPUs. Now, we are at CES. It is a little bit about show and tell. So I am proud to show you Helios right here in Vegas, the world's best AI rack. Is that beautiful or what? Now, for those of you who have not seen a rack before, let me tell you, Helios is a monster of a rack. This is no regular rack, okay? This is a double-wide design based on the OCP Open Rack Wide standard developed in collaboration with Meta, and it weighs nearly 7,000 pounds. So Gary, it took us a bit to get it up here, just so you know, but we wanted to show you what is really powering all of this AI. That's actually more than two compact cars. Now, we designed Helios working closely with our lead customers, and we chose this design so that we could optimize serviceability, manufacturability, and reliability for next-generation AI data centers. Now, let me show you a few other things. At the center of Helios is the compute tray. So let's take a closer look at what one of those trays looks like. Now, I can tell you, I probably cannot lift this compute tray, so it had to come out, but let me just describe it a little bit. Each Helios compute tray includes four MI455 GPUs, and they're paired with the next-gen EPYC Venice CPU and Pensando networking chips, and all of this is liquid cooled so that we can maximize performance. At the heart of Helios are our next-generation Instinct GPUs. And you guys have seen me hold up a lot of chips in my career, but today I can tell you I am genuinely excited to hold up this chip. So let me show you MI455X for the very first time.

(16:58)

MI455 is the most advanced chip we've ever built. It's pretty darn big. It has 320 billion transistors, 70% more than MI355. It includes 12 two-nanometer and three-nanometer compute and I/O chiplets and 432 gigabytes of ultra-fast HBM4, all connected with our next-gen 3D chip stacking technology. So we put four of these into the compute trays up here. And then driving those GPUs is our next-generation EPYC CPU, code-named Venice. Venice extends our leadership across every dimension that matters in the data center: more performance, better efficiency, and lower total cost of ownership. Now, let me show you Venice for the first time.

(17:55)

I have to say this is another beautiful chip. I do love our chips, so I can say that for sure. Venice is built with two-nanometer process technology and features up to 256 of our newest high-performance Zen 6 cores. And the key here is we actually designed Venice to be the best AI CPU. We doubled the memory and GPU bandwidth from our prior generation, so Venice can feed MI455 with data at full speed, even at rack scale. So this is really about co-engineering, and we tie it all together with our 800-gigabit Ethernet Pensando Vulcano and Salina networking chips, delivering ultra-high bandwidth as well as ultra-low latency, so tens of thousands of Helios racks can scale across the data center. Now, just to give you a little bit of the scale of what this means, each Helios rack has more than 18,000 CDNA 5 GPU compute units and more than 4,600 Zen 6 CPU cores, delivering up to 2.9 exaflops of performance.

(19:09)

Each rack also includes 31 terabytes of HBM4 memory, an industry-leading 260 terabytes per second of scale-up bandwidth, and 43 terabytes per second of aggregate scale-out bandwidth to move data in and out incredibly fast. Suffice it to say those numbers are big.
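The rack-level totals follow from the per-chip figures given earlier. The sketch below checks that arithmetic; it assumes one Venice CPU per compute tray (the talk says each tray's GPUs are "paired with the next-gen EPYC Venice CPU") and uses decimal terabytes. The class name is hypothetical and the numbers are only the ones quoted on stage.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HeliosRackSketch:
    """Per-rack arithmetic from the keynote's per-chip figures (illustrative only)."""

    gpus_per_rack: int = 72     # "the 72 GPUs in the rack"
    gpus_per_tray: int = 4      # "four MI455 GPUs" per compute tray
    cpus_per_tray: int = 1      # assumption: one Venice CPU per tray
    cores_per_cpu: int = 256    # "up to 256" Zen 6 cores per Venice
    hbm_gb_per_gpu: int = 432   # 432 GB of HBM4 per MI455X

    @property
    def trays(self) -> int:
        return self.gpus_per_rack // self.gpus_per_tray

    @property
    def cpu_cores(self) -> int:
        return self.trays * self.cpus_per_tray * self.cores_per_cpu

    @property
    def hbm_tb(self) -> float:
        # Decimal units: 1 TB = 1,000 GB.
        return self.gpus_per_rack * self.hbm_gb_per_gpu / 1000

    def min_cus_per_gpu(self, rack_cus: int = 18_000) -> float:
        # "More than 18,000" compute units per rack, spread over the rack's GPUs.
        return rack_cus / self.gpus_per_rack


if __name__ == "__main__":
    rack = HeliosRackSketch()
    print(rack.trays, rack.cpu_cores, rack.hbm_tb, rack.min_cus_per_gpu())
```

Under that one-CPU-per-tray assumption the numbers line up with the stage claims: 18 trays, 4,608 CPU cores ("more than 4,600"), about 31.1 TB of HBM4 ("31 terabytes"), and at least 250 compute units per GPU.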

(19:29)

When we launch Helios later this year, and I'm happy to say Helios is exactly on track to launch later this year, we expect it will set the new benchmark for AI performance. And just to put this performance in context, just over six months ago, we launched MI355 and we delivered up to 3X more inference throughput versus the prior generation. And now with MI455, we're bending that curve further, delivering up to 10 times more performance across a wide range of models and workloads. That is game changing. MI455 allows developers to build larger models, more capable agents, and more powerful applications, and no one is pushing faster and further in each one of these areas than OpenAI.

(20:23)

To talk about where AI is headed and the work that we're doing together, I'm extremely happy to welcome the president and co-founder of OpenAI, Greg Brockman, to the stage. Greg, it is so great to have you here. Thank you for being here. OpenAI truly started all of this with the release of ChatGPT a few years ago, and the progress you've made is just incredible. We're absolutely thrilled about our deep partnership. Can you just give us a picture of where things are today? What are you seeing, and how are we working together?

Greg Brockman (21:06):

Well, first of all, it's great to be here. Thank you for having me. ChatGPT is very much the overnight success that was seven years in the making, right? We started OpenAI back in 2015 with a vision that deep learning could lead to artificial general intelligence, to very powerful systems that could benefit everyone. And we wanted to help actually realize that technology, bring it to the world, and democratize it. And we spent a long time just making progress, where year over year the benchmarks would look better and better, but the first time we had something so useful that many people around the world wanted to use it was ChatGPT. And we were just blown away by the creativity and the ways in which people found to really leverage the models we had produced in their daily lives. And so, just out of curiosity, how many people in the room are ChatGPT users?

Dr. Lisa Su (21:56):

That's pretty much the whole room, Greg, I would just say.

Greg Brockman (22:01):

Yes, thank you. I'm glad to hear it. But very importantly, how many of you have had an experience that was very key to your life or the life of a loved one, whether it's in healthcare, in helping manage a newborn, or in any other walk of your life? To me, that's the metric that we want to optimize. And seeing that number go up and to the right has been something really different over 2025. We really moved from being just a text box where you ask a question and get an answer, something very simple and contained, to people really using it for very personal, very important things in their lives. And it's not just in personal lives, for healthcare and aspects like that. It's also in the enterprise, really starting to bring models like Codex to transform software engineering. And I think that this year we're really going to see enterprise agents take off.

(22:52)

We're seeing scientific discovery start to be really accelerated, for example in developing novel math proofs. The first time we saw that was just a couple of months ago, and the progress is continuing. It's really across every single endeavor of human knowledge work: wherever there's human intelligence that can be leveraged, you can amplify it. We now have an assistant, right? We now have a tool. We have an advisor that is able to amplify what people want to do.

Dr. Lisa Su (23:18):

I completely agree with you, Greg. I think we have seen just an enormous acceleration of what we're using this tech for. Now, I would say, I think every single time I see you, you tell me you need more compute.

Greg Brockman (23:31):

It's true. It's true.

Dr. Lisa Su (23:32):

It's almost like a broken record. You could just play the, "Greg wants more compute." Can you talk about just some of the things that you're seeing in the infrastructure, some of the bottlenecks, and where do you think we should be focusing as an industry?

Greg Brockman (23:47):

Well, the why, why we need more compute, is the most important question. When the models were not that capable, where we were in 2015, 2016, 2017 and so forth, you basically just wanted to train a model and evaluate it, and maybe there'd be a very narrow task it would be useful for. But as we've made this exponential progress on the models, there's actually exponential utility to them. People want to bring it into their lives in a very scalable way. What we're seeing is a move from asking a question and getting an answer to agentic workflows, where you ask the model to write some software for you and it goes off for minutes or hours or soon even days. And you're not just operating one agent, you're operating a suite of agents. A single developer can have 10 different work streams all going at once on their behalf.

(24:40)

It should be the case that you wake up in the morning, and this is the kind of thing we are going to build, by the way, and ChatGPT has taken items off your to-do list at home and at work. All of that is going to require that big graph you had of how much compute the world's going to need; it's going to require far more compute than we have right now. I would love to have a GPU running in the background for every single person in the world, because I think it can deliver value for them, but that's billions of GPUs. No one has a plan to build that kind of scale. And so, what we're really seeing is benefits in people's lives. For example, some of my favorite applications, and some of the ones I think are the most important, are in healthcare, where we actually see people's lives being saved through ChatGPT.

(25:23)

Just over the holidays, the husband of one of my coworkers had leg pain. They went to the ER and got it X-rayed, and the doctors were like, "Ah, it's a pulled muscle. Just wait it out. You'll be fine."

(25:37)

They went home, it got a little bit worse, and they typed the symptoms into ChatGPT. ChatGPT said, "Go back to the ER. This could be a blood clot."

(25:45)

And in fact, it was. It was deep vein thrombosis in the leg, in addition to two blood clots in the lungs. If they had just waited it out, that would've likely been fatal. It's not a unique story. Fidji, our CEO of Applications, whom I work very closely with every day: ChatGPT literally saved her life too. She was in the hospital for a kidney stone and had an infection. They were about to inject an antibiotic and she said, "Wait just a moment."

(26:15)

She asked ChatGPT whether that one was safe for her. ChatGPT, which has all of her medical history, said, "No, no, because you had this other infection two years ago that could re-trigger it and that could actually be life-threatening as well."

(26:27)

And so, she showed it to the doctor. The doctor's like, "Wait, what? You had this condition? I didn't know. I only had five minutes to review your medical history."

Dr. Lisa Su (26:36):

I completely agree, Greg. I mean, that's one of the things that all of us can use to help.

Greg Brockman (26:42):

Yes.

Dr. Lisa Su (26:42):

And that's really what we have here. Look, I think you've painted a vivid picture of why we need more compute and of what we can do with AI. We feel exactly the same way. Now, we've also done an incredible amount of work with your engineering teams. A lot of MI455 and Helios actually came out of feedback from our engineering teams working closely together. Can you talk a little bit about that infrastructure, what your customers want, and how you're going to use MI455?

Greg Brockman (27:12):

Well, one of the key things with how AI is evolving is the balance of different resources on the GPU. And so, we have a slide showing how that balance of resources has evolved across different MI generations. So you see this slide that I very painstakingly put together. Actually, I did not painstakingly put it together. I asked ChatGPT to create the slide. It literally did all the research, and you can see some sources at the bottom. It actually went and read a bunch of different AMD materials, created these charts, and put together the title and all these headers. It produced not just an answer that I'd then have to go do a bunch of work on; it produced an artifact, an artifact that I can show. And this is just one simple example of what you can do today with ChatGPT. We are moving to a world where you are going to be able to have an agent that does all this work for you.

(28:08)

And for that, we're going to need hardware that is really tuned to our applications. What we have in mind is that we're moving to a world where human attention, human intent, becomes the most precious resource. So there should be very low latency interaction any time a human's involved, but there should also be an ocean of agentic compute that's constantly running at very high throughput. These two different regimes, low latency and high throughput, put a bunch of different pressures on hardware manufacturers such as yourself. So it's a pleasure to be working together.
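To make the two regimes concrete, here is a minimal, hypothetical batching sketch; it is not OpenAI's or AMD's scheduler. When a human is waiting, it serves small batches of interactive requests immediately (latency regime); otherwise it packs large batches of background agent work (throughput regime). The class name, queue layout, and batch sizes are all illustrative assumptions.

```python
from __future__ import annotations

from collections import deque


class TwoRegimeScheduler:
    """Hypothetical batcher: small, immediate batches when a human is waiting;
    large, throughput-oriented batches of background agent work otherwise."""

    def __init__(self, interactive_batch: int = 4, background_batch: int = 64) -> None:
        self.interactive: deque[str] = deque()
        self.background: deque[str] = deque()
        self.interactive_batch = interactive_batch
        self.background_batch = background_batch

    def submit(self, request_id: str, interactive: bool) -> None:
        (self.interactive if interactive else self.background).append(request_id)

    def next_batch(self) -> list[str]:
        if self.interactive:
            # Latency regime: serve waiting humans first, in small batches.
            queue, size = self.interactive, self.interactive_batch
        else:
            # Throughput regime: pack as much background work as the batch allows.
            queue, size = self.background, self.background_batch
        return [queue.popleft() for _ in range(min(size, len(queue)))]


if __name__ == "__main__":
    sched = TwoRegimeScheduler(interactive_batch=2, background_batch=3)
    for i in range(5):
        sched.submit(f"agent-{i}", interactive=False)
    sched.submit("chat-0", interactive=True)
    print(sched.next_batch())  # ['chat-0']
    print(sched.next_batch())  # ['agent-0', 'agent-1', 'agent-2']
```

Strict priority like this can starve background work under constant interactive load; a real system would likely reserve some capacity for each regime, which is exactly the kind of hardware and software balance the conversation points to.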

Dr. Lisa Su (28:41):

We like building GPUs for you. That works well. Look, lastly, Greg, let's talk a little bit about the future. One of the things that we've talked about is that some people out there are wondering, "Is the demand really there? Do we really need all of this AI compute?" And I know you and I have talked about it. I think people don't have the view of the future that you see. I mean, you have a special seat. So paint a picture of what the world looks like in a few years.

Greg Brockman (29:10):

Well, looking backwards, we have been tripling our compute every single year for the past couple years, and we've also tripled our revenue. And the thing that we find within OpenAI is every time we want to release a new feature, we want to produce a new model, we want to bring this technology to the world, we have a big fight internally over compute, because there are so many things we want to launch and produce for all of you that we simply cannot, because we are compute constrained. And I think we're moving to a world where GDP growth will itself be driven by the amount of compute that is available in a particular country, in a particular region. And I think that we're starting to see the first inklings of this. And I think over the next couple of years, we'll see it start to hit in a real way.

(29:54)

And I think that, with AI, data centers can actually be very beneficial to local communities. I think that's a really important thing for us to prove to people. But there's also the AI technology itself. In terms of scientific advances, think about what has been the most fundamental driver of increases in quality of life. It really is science. And every time we've gone into specific domains, you just see how much limitation there is from how things are done, because a particular discipline is built on a bunch of expertise held by a small number of experts, and it's hard for them to propagate that to future generations. For example, in biology, we hooked up GPT-5 to a wet lab setup and had humans describe what the wet lab looked like. The model said, "Here are a couple of ideas to try."

(30:41)

The humans would go try it. And it actually produced a 79-fold, almost hundredfold, improvement in the efficiency of a particular protocol. And that's just one particular reaction that people have spent some time optimizing, but not a ton of time, because there's so much surface area in biology that no human can possibly get to all of it. No human can be an expert across every single subfield. And I think what we're going to see is AIs that bridge across disciplines that humanity has been unable to bridge. You see this within healthcare: as humans learn more, we specialize more, but AI is going to amplify what we can do. And so, I think AI will be brought to bear on hard problems. This will be true for enterprise too. Every single application, I think, will have an agent that is accelerating what people want to do.

(31:28)

And I think the hardest problem for humanity will be deciding how do we use the limited resources we have to get the most benefit for everyone?

Dr. Lisa Su (31:37):

That is an incredible vision. Greg, we are so excited to be working with you. I think there's no question in the world that we have the power to really change people's lives. Thank you for the partnership and really look forward to it.

Greg Brockman (31:51):

Thank you.

Dr. Lisa Su (32:01):

So as you heard from Greg, compute is key and MI455 is a game changer. But with the MI400 series, we've designed a full portfolio of solutions for cloud, enterprise, supercomputing and sovereign AI. At the top is Helios, built for leading-edge performance, hyperscale training, and distributed inference at rack scale. For enterprise AI deployments, we have Instinct MI440X GPUs that deliver leadership training and inference performance in a compact eight-GPU server designed for easy use in today's existing data center infrastructure. And for sovereign AI and supercomputing, where extreme accuracy matters most, we have the MI430X platform that delivers leadership hybrid computing capabilities for both high-precision scientific and AI data types. This is something unique to AMD: because of our chiplet technology, we can actually have the right compute for the right application.

Now, hardware's only part of the story. We believe an open ecosystem is essential to the future of AI. Time and time again, we've seen that innovation actually gets faster when the industry comes together and aligns around an open infrastructure and shared technology standards. And AMD is the only company delivering openness across the full stack: hardware, software, and the broader solutions ecosystem. Our software strategy starts with ROCm. ROCm is the industry's highest-performance open software stack for AI. We have day-zero support for the most widely used frameworks, tools, and model hubs, and it's also natively supported by the top open source projects like PyTorch, vLLM, SGLang, Hugging Face, and others that are downloaded more than a hundred million times a month and run out of the box on Instinct, making it easier than ever for developers to build, deploy, and scale on AMD. One of the exciting AI companies using AMD and ROCm to power their models is Luma AI. Please join me in welcoming the Luma AI CEO and co-founder Amit Jain to the stage.
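What "natively supported" can look like in practice for PyTorch: ROCm builds of PyTorch reuse the `torch.cuda` interface and the `"cuda"` device string, so existing device code runs unchanged, and `torch.version.hip` is set only on ROCm builds. The minimal check below is a sketch under those facts; the function name is hypothetical, and it falls back to the CPU (or reports that PyTorch is missing) so it runs anywhere.

```python
def describe_torch_backend() -> str:
    """Report which backend the installed PyTorch build targets, then run a tiny matmul."""
    try:
        import torch
    except ImportError:
        return "PyTorch not installed"
    # ROCm builds set torch.version.hip to a version string; CUDA and CPU-only builds leave it None.
    backend = "ROCm/HIP" if getattr(torch.version, "hip", None) else "CUDA or CPU-only"
    # On ROCm, AMD GPUs are exposed through torch.cuda and the "cuda" device string.
    device = "cuda" if torch.cuda.is_available() else "cpu"
    x = torch.randn(256, 256, device=device)
    y = x @ x.T  # same code path regardless of vendor
    return f"{backend} build, ran a {tuple(y.shape)} matmul on {device}"


if __name__ == "__main__":
    print(describe_torch_backend())
```

The design point is that nothing in the model code names a vendor; the backend is decided by which PyTorch wheel is installed.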

Amit Jain (34:24):

Hey, Lisa.

Dr. Lisa Su (34:28):

Hello, Amit. How are you? It's great to have you here with us. You're doing some incredible work in video generation and multimodal models. Can you tell us a little bit about Luma and what you're doing?

Amit Jain (34:37):

Absolutely. Lisa, thank you so much for having me here.

Dr. Lisa Su (34:40):

Of course.

Amit Jain (34:41):

Luma's mission is to build multimodal general intelligence so AI can understand our world and help us simulate and improve it. Most AI video and image models today are in early, early stages, and they're used to generate pixels. They're used to produce pretty pictures. What the world needs are more intelligent models that combine audio, video, language, and image altogether. So, at Luma, we are training systems that simulate physics and causality, are able to go out, do research, call tools, and then finally render out the results in audio, video, image, text, whatever is appropriate for the information that you're trying to work with. In short, we are modeling and generating worlds. So, as an example, let me show you some results from our latest model, Ray3. By the way, Ray3 is the world's first reasoning video model. What that means is it is able to think first in pixels and latents and decide whether what it's about to generate is good. And it's also the world's first model that can generate in 4K and HDR. So, please take a look.
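Luma doesn't describe how Ray3's reasoning works internally, so the following is only a generic illustration of the idea of "deciding whether what it's about to generate is good": a propose, score, select loop (essentially best-of-N with an early exit). `propose` and `score` are hypothetical stand-ins for a generator and a critic, and plain strings stand in for latents or frames.

```python
from __future__ import annotations

import random
from typing import Callable


def generate_with_self_check(
    propose: Callable[[random.Random], str],
    score: Callable[[str], float],
    num_candidates: int = 4,
    threshold: float = 0.8,
    seed: int = 0,
) -> tuple[str, float]:
    """Draft candidates one at a time, score each, and return the first one
    that clears `threshold`; if none do, return the best-scoring draft."""
    if num_candidates < 1:
        raise ValueError("num_candidates must be at least 1")
    rng = random.Random(seed)
    best, best_score = "", float("-inf")
    for _ in range(num_candidates):
        candidate = propose(rng)   # cheap draft (stand-in for a latent)
        s = score(candidate)       # critic judges the draft before committing
        if s >= threshold:
            return candidate, s    # good enough: stop early
        if s > best_score:
            best, best_score = candidate, s
    return best, best_score


if __name__ == "__main__":
    drafts = ["blurry frame", "plausible frame", "consistent frame"]
    quality = {"blurry frame": 0.2, "plausible frame": 0.7, "consistent frame": 0.9}
    print(generate_with_self_check(lambda rng: rng.choice(drafts), quality.__getitem__))
```

The early exit trades a little quality for compute: once a draft clears the bar, no further candidates are generated.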

Speaker 3 (35:55):

When you close your eyes, what do you see? When we talk about reality, how do you know what is real? Or what is simply your imagination? Now open your eyes.

Dr. Lisa Su (36:59):

I could say, Amit, that looked pretty incredible. So, tell us, how are customers using Ray3 today?

Amit Jain (37:07):

So, we are working with very large enterprises as well as individual creators across the spectrum, in advertising, media, entertainment, and industries where you want to tell your story. 2025 was the year when they started to deploy our models and experiment with them. And towards the end of it, we are seeing large-scale deployments where people are using our models for as much as making a 90-minute feature-length movie. What customers are also asking us for a lot, as they use it more and more, is control and precision. How can they get their particular vision onto the screen? And what we have realized through our research is that control comes from intelligence, not just better prompts. You can't keep typing in prompts again and again to actually do those things. So, we have built a whole new model on top of Ray3 called Ray3 Modify that allows you to edit the world.

(38:03)

So, let me actually show you what that looks like. This won't have audio, so I'm going to tell you a little bit about what you're seeing. What's playing on the screen is a demo of Ray3's world-editing capabilities. It can take any real or AI footage, footage from cameras or footage that you generated, and change it as little or as much as you want to realize your creative goals. It's a powerful system that we have developed for our most ambitious and demanding customers, and they span the gamut across entertainment and advertising. This has allowed us to enable a new era of hybrid human-AI productions. The human becomes the prompt through motion, timing, and direction: you act it out, and then the model can produce it. What that means in practice is that filmmakers and creators can now create entire cinematic universes without elaborate sets, and then edit and modify anything to get to the result they want. This has never been possible before, but in 2026, we are focused on going much further. 2026 will be the year of agents, where AI will be able to help you accomplish more of the task, or hopefully the full task end to end, rather than doing some patchwork. So, our teams have been working diligently on building the world's most powerful multimodal agent models. Using Luma will feel like you have a large team of capable creatives working with you in your creative pursuit. I want to show you a brief demo of what that would feel like.

(39:38)

So, what you're seeing here is a new multimodal agent that can take a whole script of ideas with characters and everything and start imagining that in front of you. Now, this is not script to a movie. This is human-AI interaction, and our next generation of models provides the ability to analyze multiple frames and long form video, and to make selections while maintaining the fidelity of the characters, scenes, and story, only editing when it's needed. Here you're seeing human and AI collaborate in designing characters, environments, shots, and the whole world. And with our agents, we believe that creatives will be able to make entire stories that used to take a large production before. Again, this has never been possible before, and we have been using it heavily internally, and we couldn't be more excited. Individual creatives or small teams will suddenly have the power of doing what entire Hollywood studios do.

Dr. Lisa Su (40:33):

That's pretty amazing. It's really nice to see how these Luma agents come together and make this happen. Now, I know you have a lot of choices in compute. And when we first started talking, actually, you called me and said you needed compute, and I said, "I thought I could help." Can you tell us a bit about why you chose AMD and what has your experience been?

Amit Jain (40:55):

Yeah, we bet on AMD very early on. That call was in 2024, early 2024. And since then, our partnership has grown into a large scale collaboration between our teams, so much so that today 60% of Luma's rapidly growing inference workloads actually run on AMD cards. So, initially when we started out, we used to do a bunch of engineering, but today we are at a point where almost any operators, almost any workloads that we can imagine run out of the box on AMD. And this is huge props to your software teams and the diligent work that is going into the ROCm ecosystem. We are building multimodal models, and these workloads are very complex compared to text models.

(41:39)

One example of that is that these consume hundreds of times, thousands of times more tokens. A video that you saw, a 10-second video, is easily about 100,000 tokens. Compare that to a response from an LLM, which is about 200 to 300 tokens. So, when we are working with this much information, TCO and inference economics are absolutely critical to our business; otherwise there's no way to serve all the demand that is coming our way. Through our collaboration with the AMD team, we have been able to achieve some of the best TCO, total cost of ownership, that we have ever seen in our stack.

(42:17)

And we believe that as we build these more complex models that are able to do auto-regressive diffusion and that are able to do text and image and audio and video, all at the same time, this collaboration will allow us to significantly differentiate on cost and efficiency, which as you know in AI is a big deal. So, through this collaboration, we have developed such a degree of confidence that in 2026, we are expanding our partnership to the tune of about 10 times what we have done before. And these 455 cards … And I couldn't be more excited for MI455X, because the rack scale solution and the memory and the infrastructure that you're building are essential for us to be able to build these world simulation models.

Dr. Lisa Su (43:04):

Well, we love hearing that, Amit. And look, our goal is to deliver you more powerful hardware. Your goal is to make it do amazing things. So, just give us a little brief view of what do you see customers doing over the next few years that just isn't even possible today?

Amit Jain (43:20):

Right. So, as Greg was mentioning, early on in LLM land, in 2022 and 2023, LLMs were great for writing copy, small emails, things like that. We could have never imagined that we would actually put these models into real-time systems, into healthcare and these kinds of things. Through accuracy and architecture and scaling, LLMs have now gotten to that point. Video models are currently in that early stage. Today they're great for generating video and pretty pictures, but soon, by scaling these models up and by improving the accuracy and data, we will end up at a place where these models help us simulate real physical processes in the world like CAD, architecture, and fluid flows, help us design entire rocket engines, and plan cities.

(44:09)

And this is not outrageous. This is what we do today manually with big giant teams in simulation environments. These models will allow us to do that and automate that to a great degree. And as they become more and more accurate, multimodal models are what we need for the backbone of general purpose robotics. Your home robot will run hundreds of simulations in its head in image and video and then try out, okay, how do I do this? How do I solve this? That way it's able to do a lot more than the current generation of LLM and VLM robots are able to do. This is how the human brain works. Humans are natively multimodal. Our AI systems will be as well.

Dr. Lisa Su (44:50):

That sounds wonderful, Amit. Look, thank you so much for being here today. Thank you for the partnership, and we really look forward to all that you're going to do next.

Amit Jain (44:58):

Thank you so much.

Dr. Lisa Su (44:58):

Thank you. So, you've heard from Greg and Amit. What they said is they need more compute to build and run their next gen models, and it is the same across every single customer that we have, which is why the demand for compute is growing faster than ever. Now, meeting that demand means continuing to push the envelope on performance far beyond where we are today. MI400 series was a major inflection point in terms of delivering leadership across all workloads: training, inference, and scientific computing. But we are not stopping there. Development of our next gen MI500 series is already well underway. With MI500, we take another major leap in performance. It's built on our next gen CDNA 6 architecture, manufactured on two nanometer process technology, and uses higher speed HBM4e memory. And with the launch of MI500 in 2027, we're on track to deliver a 1,000x increase in AI performance over the last four years, making more powerful AI accessible to all.

(46:15)

So, with that, let's … Thank you. So, with that, now let's shift from the cloud to the devices that make AI more personal: PCs. So, for decades, the PC has been about a powerful device helping us be more productive, whether at work or at school. But with AI, the PC has become not just a tool, but a powerful, essential part of our lives as an active partner. It learns how you work, it adapts to your habits, and it can help you do things faster than you've ever expected, even when you're offline. AI PCs are starting to deliver real value across a wide range of everyday tasks, from content creation and productivity to intelligent personal assistance. Let's just take a look at a few of the AI PC applications today. Starting with content creation, these videos were created from simple text prompts on a Ryzen AI Max PC, so not in the cloud, but in a local environment.

(47:32)

Anyone can generate professional quality photos and videos in minutes with no design expertise. Microsoft has been a key enabler of AI PCs, helping bring next generation capabilities directly into our productivity tools. For example, managing your meetings, summarizing meetings, summarizing emails, quickly finding files that you need, using real time translation on video conferences. And with Microsoft Copilot, advanced AI capabilities are being built directly into the Windows experience to complete tasks faster. You just describe what you need and the PC takes it from there.

(48:11)

Now, at AMD, we saw the AI PC wave early and we invested. That's why we've led every inflection point. We were the first to integrate a dedicated on-chip AI engine in 2023 and the first to deliver Copilot+ x86 PCs in 2024. And with Ryzen AI Max, we created the first single chip x86 platform that could run a 200 billion parameter model locally. And now we're extending that leadership again with our next gen Ryzen AI notebook and desktop processors. So, today I'm proud to announce the new Ryzen AI 400 series, the industry's broadest and most advanced family of AI PC processors. Ryzen AI 400 combines up to 12 high performance …

(49:39)

Now, powering the next generation of AI PC experiences takes more than just hardware. It takes smarter software with models that are lighter, faster, and can run directly on device. These are different than what you're seeing in the cloud. So, to talk more about this next wave of model innovation, please welcome Ramin Hasani, co-founder and CEO of Liquid AI. Ramin, it's great to have you here. I'm very excited about the work that you guys are doing at Liquid. You're really taking a different approach to models. Can you talk a little bit to the audience about what Liquid is doing and why it's different from others?

Ramin Hasani (50:34):

Absolutely, Lisa. It is great to be here. We are a foundation model company spun out of MIT two and a half years ago. We build powerful, fast, and processor-optimized generative models. The goal is to substantially reduce the computational cost of intelligence from first principles without sacrificing quality. That means Liquid models deliver frontier model quality right on a device. The device could be a phone, a laptop, a robot, a coffee machine, or an airplane. Basically, anywhere compute exists, with three value propositions: privacy, speed, and continuity. It can work seamlessly across online and offline workloads.

Dr. Lisa Su (51:49):

Ramin, our teams have been working really closely on bringing more capable models to AI PCs. Can you share a bit about that work?

Ramin Hasani (51:58):

Absolutely. Today I've got two new product announcements. One, we are excited to announce Liquid Foundation Models 2.5, the most advanced tiny class of models on the market. At only 1.2 billion parameters, the model performs best at instruction following within its class, and even against models larger than its class. LFM 2.5 instances are the building blocks of reliable AI agents on any device. To put this in perspective for you, this model delivers instruction following capabilities better than the DeepSeek models and Gemini Pro kind of models, Gemini 2.5 Pro, right on the device. We are releasing five model instances: a chat model, an instruct model, a Japanese enhanced language model, a vision language model, and a lightweight audio model, an audio language model, basically. These are highly optimized for AMD Ryzen AI CPUs, GPUs, and NPUs. And today they're available for download on Hugging Face and on our own platform, Leap. There you can enjoy them.

Dr. Lisa Su (53:13):

That's pretty cool.

Ramin Hasani (53:20):

So, we can stack these LFM 2.5 instances together to build agentic workflows, but it would be really amazing if we could bring all these modalities into one place. So, that brings me to my second announcement, LFM3. LFM3 is designed to be natively multimodal: it processes text, vision, and audio as input and delivers audio and text as output in 10 different languages, with sub-100-millisecond latency for audiovisual data. You will get LFM3 later in the year.

Dr. Lisa Su (53:56):

All right, that's fantastic. So, now, Ramin, help our audience understand why should they be so excited about LFM3? What can we do with these models on an AI PC?

Ramin Hasani (54:11):

Absolutely. So, most assistants, AI assistants, copilots today are reactive agents. You open an app, then you ask a question, and it responds. But when the AI is running fast on the device and is always on, it can be working on tasks proactively for you. The task can be done in the background. So, let me show you a quick demo, a reference design to inspire what is possible to build on PCs with LFM instances. Let's jump in. Imagine you're a sales leader working on your AMD Ryzen laptop with LFM3-backed proactive agents activated. You're in full focus mode, working on a spreadsheet, and notifications start piling up. You get a calendar notification for a sales meeting, but you want to continue your work in deep focus. A Liquid proactive agent notices the meeting and offers to join on your behalf. You allow the agent to join, and while you focus on your data analysis task, in the background, the meeting is in progress with your agent representing you.

Dr. Lisa Su (55:17):

Are you sure we can trust this agent?

Ramin Hasani (55:19):

I think.

Dr. Lisa Su (55:21):

I'm a little worried there, Ramin.

Ramin Hasani (55:24):

This system can actually do more than transcription and really … functionality. So, with the Deep Research functionality, it can analyze every email and draft the response for you. Again, everything is under your own control. This is not going to go rogue. Everything is offline, locally on the device. So, this system can deliver a summary and can do the job better than what you have seen from reactive agents. I think this year is going to be the year of proactive agents. And I'm very excited to announce that we are collaborating with Zoom to bring these features to the Zoom platform.

Dr. Lisa Su (56:20):

That's fantastic. Ramin, we're really excited about what you're doing. I think you've just given people just a glimpse of what we can do when we bring true AI capability to our PCs. So, thank you. We're excited and we look forward to all we're going to do together.

Ramin Hasani (56:43):

Thank you so much. Thank you for having me. Thank you.

Dr. Lisa Su (56:50):

Thank you. So, now you've seen a little bit about what's possible with local AI, but the latest PCs aren't just running AI apps, they're actually building them. That's why we created Ryzen AI Max, the ultimate PC processor for creators, gamers, and AI developers. It's the most powerful AI PC platform in the world, with 16 high performance Zen 5 CPU cores, 40 RDNA 3.5 GPU compute units, and an XDNA 2 NPU delivering up to 50 TOPS of AI performance, all connected by a unified memory architecture that supports up to 128 gigabytes of shared memory between the CPU and GPU. In premium laptops, Ryzen AI Max is significantly faster in both AI and content creation applications compared to the latest MacBook Pro. In small form factor workstations, Ryzen AI Max delivers performance comparable to NVIDIA's DGX Spark at a much lower price, generating up to 1.7 times more tokens per second per dollar when running the latest gpt-oss models.

(58:02)

And because Ryzen AI Max supports both Windows and Linux natively, developers maintain full access to their preferred software environment, tools, and workflows. Now, there are more than 30 Ryzen AI Max systems on the market today, with new laptops, all-in-ones and compact workstations launching at CES and rolling out throughout the year. But our mission is to advance AI everywhere for everyone. The truth is, there are AI developers, many of you in this room, who want access to platforms that enable you to develop on the fly. So, we took this one step further. Today, I'm excited to announce the AMD Ryzen AI Halo, a new reference platform for local AI deployment. Now, I would say this is pretty beautiful. Do you guys agree?

Speaker 4 (58:59):

Yes.

Dr. Lisa Su (59:01):

So, let me tell you what it is. This is the smallest AI development system in the world, capable of running models with up to 200 billion parameters locally, not connected to anything. It's powered by our highest end Ryzen AI Max processor with 128 gigabytes of high speed unified memory that is shared by CPU, GPU, and NPU. This architecture accelerates system performance and makes it possible to efficiently run large AI models on a compact desktop PC that fits in your hand. Thank you.

(59:39)

Halo supports multiple operating systems natively, ships with our latest ROCm software stack, comes preloaded with the leading open source developer tools, and runs hundreds of models out of the box. And this really gives developers everything you need to build, test, and deploy local agents and AI applications directly on the PC. Now, for all of you who are wondering, Halo is launching in the second quarter of this year, and we can't wait for folks to get their hands on them.

(01:00:18)

So, now let's turn to the world of gaming and content creation. A few gamers out there. I think there are a lot of gamers out there. Look, every day gamers and creators rely on AMD across Ryzen and Radeon PCs, Threadripper workstations and consoles from Sony and Microsoft to deliver tens of billions of frames. And while the visual quality of those frames has advanced dramatically over the years, the way we build those worlds really hasn't.

(01:00:48)

It still takes teams months or even years to bring a 3D experience to life. Now, AI is really starting to change that. To show what's next in 3D world creation, I'm honored to introduce one of the most influential figures in AI. Known as the godmother of AI, her work has transformed how machines see and understand the world. Please welcome the co-founder and CEO of World Labs, Dr. Fei-Fei Li.

Fei-Fei Li (01:01:17):

Thank you.

Dr. Lisa Su (01:01:28):

Fei-Fei, we are so excited to have you here. You've been one of the leaders shaping AI for decades. Can you just give us a little bit of your perspective? Where are we today and why did you start World Labs?

Fei-Fei Li (01:01:42):

Yeah. First of all, thank you, Lisa, for inviting me to be here. Congratulations on all the announcements. I can't wait to use some of them. So, it's true that there have been great breakthroughs in AI progress in the past few years. And as you said, I've been around the block for more than two decades, and I really could not be more excited than now by where things are going. So, in the past few years, language-based intelligence in AI technology has really taken the world by storm. We're seeing the proliferation of all kinds of capabilities and applications. But the truth is, there's a lot more than just language intelligence. Even for us humans, there's more than passively looking at life in the world. We are incredibly spatially intelligent animals, and we have profound capabilities that use our own spatial intelligence to connect perception with action.

(01:02:47)

Think about all of you being here: how you braved the airports this morning, and I'm one of them, or woke up in your hotel room and got to the nice coffee shop, or found your way through this maze in Vegas to be here. All this requires spatial intelligence. So, what excites me is that there's now a new wave of gen AI technology, for both embodied AI and generative AI, that lets us finally give machines something closer to human-level spatial intelligence. It's the ability to not only perceive but create 3D or even 4D worlds, reason about objects and people, and imagine entirely new environments that still obey the laws of physics and dynamics in worlds, virtual or real. So, that's why I started World Labs. I really want to bring spatial intelligence to life and deliver value to people.

Dr. Lisa Su (01:03:53):

I remember the first time I talked to you about your concept for World Labs and your passion about what this could bring. Tell us a little bit about what your models do so the audience gets a feel for what does this really mean.

Fei-Fei Li (01:04:06):

Yeah. Well, I heard that there are gamers out there, so this is very exciting. So, traditionally, building 3D scenes requires laser scanners or calibrated cameras or handbuilt models made with pretty sophisticated and complicated software. But at World Labs, we're creating a new generation of models that use recent gen AI technology to learn the structure, and not just flat pixel structure. I'm talking about the 3D, 4D structure of the world, learned directly from data, a lot of data. So, give the model a few images, or even one image, and the model itself can fill in the missing details, predict what's behind objects, and generate rich, consistent, permanent, navigable 3D worlds. So, what you're seeing here on screen is a hobbit world that's created by our World Labs model called Marble.

(01:05:15)

We just gave it a handful of images and it created these 3D scenes that are persistent and that you can navigate. You can even see a top view. Our system transformed a few visual inputs into a fully navigable, expansive 3D world. And it shows how these models don't just reconstruct the environment; they really imagine cohesive worlds, wondrous worlds. And once these worlds exist, they flow together, allowing effortless transitions from one environment to the next and scaling into something much larger. And this is much closer to how humans piece together a place from a few glances.

Dr. Lisa Su (01:06:04):

Well, it looks pretty amazing that you can do that with such little input. Now, can you just show us a little bit about how the technology works?

Fei-Fei Li (01:06:13):

Yes, definitely. Let's ground it in the real world a bit more, away from the Hobbit world. Let's do something that you're very familiar with. Over the break our team went to AMD's Silicon Valley office. I hope they got your permission.

Dr. Lisa Su (01:06:32):

They did not, but that's okay.

Fei-Fei Li (01:06:34):

Okay. Well, now here we are. We just used some regular phone cameras. There's no special equipment, just phones to capture a few images. And then we put them into World Labs' 3D generative model called Marble. And then our model, running on AMD's MI325X chip and the ROCm software stack, can create a 3D version of that environment, including windows, doors, furniture, size, and a sense of depth and scale. And keep in mind, you're not looking at photos, you're not looking at videos, you're looking at truly 3D consistent worlds. And then our team started to have a little more fun and decided to-

Dr. Lisa Su (01:07:30):

You decided to remodel?

Fei-Fei Li (01:07:31):

Exactly. For free for you, in different design styles. I don't know which one you guys like the most. I personally really like the Egyptian one, but maybe that's because I'm going there in a few months. And this transformation keeps the geometric consistency of the 3D inputs, so you can imagine this can be such a powerful tool for many use cases. Whether you're doing robotic simulation or game development or design, what would traditionally take months in a typical workflow, we really could do in minutes now.

(01:08:19)

And we can even navigate into an entirely different world, like actually the Venetian hotel. We just did that yesterday by taking a picture, putting it into the model, and then having a little fun. And it turned this whole place into a 3D imaginative space. And now I'm sure you guys can take pictures and send them to Marble and experience this yourselves. But what you don't see here behind the scenes is how much computation is happening and why inference speed really matters. The faster we can run these models, the more responsive the world becomes: instant camera moves, instant edits, and a scene that stays coherent as you actually navigate and explore. And that's what's really important.

Dr. Lisa Su (01:09:20):

Fei-Fei, I think you're going to have a few people going out to your website to try Marble this evening.

Fei-Fei Li (01:09:24):

We'll keep the server up.

Dr. Lisa Su (01:09:27):

But look, that looked really amazing. Can you just share a little bit about your experience working with AMD and our work on Instinct and ROCm?

Fei-Fei Li (01:09:36):

Yeah, of course. And even though we are old friends, our partnership is relatively new. And I've got to be honest, I'm very impressed by how quickly this came together. Part of our model is a real-time generative frame model. It was running on MI325X in under a week. And then with AMD Instinct and ROCm, our teams were able to iterate really rapidly over the course of a few weeks to improve performance by more than fourfold. And that was really impressive. That matters because spatial intelligence is fundamentally different from what came before. For AI to understand and navigate 3D structure, handle motion, and understand physics requires enormous memory, massive parallelism, and very fast inference. And seeing your announcement, I can't wait to see platforms like MI450 continue to scale. They will give us the ability to train larger world models and, just as importantly, to run them fast enough that these environments can feel alive and react instantly as the user or agents move, explore, interact, and create.

Dr. Lisa Su (01:11:04):

No, that's wonderful. Thank you for those comments. Your team has been fantastic working together.

Fei-Fei Li (01:11:09):

Thank you.

Dr. Lisa Su (01:11:11):

Fei-Fei, with all the compute performance that we're going to give you and all of the innovation in your models, give the audience a view of what to expect over the next few years.

Fei-Fei Li (01:11:21):

Yeah, I know. And as you know me, I don't like to hype. I think the world-

Dr. Lisa Su (01:11:27):

This is what they call under hype, Fei-Fei.

Fei-Fei Li (01:11:30):

No, I think we should just share what it is. It is going to be a changing world. A lot of workflows, a lot of things that were difficult to do, will actually go through a revolution because of this incredible technology. For example, creators can now experience and create real world scenes in real time, shaping what's in their mind's eye, experimenting with the space, the light, the movement, as if they are sketching inside a living world. And intelligent agents, whether robots or vehicles or even tools, can learn inside very rich, physics-aware digital worlds before they need to be deployed into the real one, making them much safer, more capable, and more helpful to people, and making development much faster. And designers or architects, for example, can walk through ideas before anything is built, exploring form, flow, and materials, and navigating spaces rather than just staring at static plans.

(01:12:51)

What excites me most is that this represents a shift in how AI shows up in our lives. We're moving from systems that passively understand words and images to systems that not only understand, but can help us interact with the world. Lisa, what we are sharing today, turning a handful of images or photos into a coherent, explorable world in real time, is not a glimpse of the distant future anymore; it is really the beginning of the next chapter. You and I have talked about this offline: we know that, as powerful as AI technology is, it's also our responsibility to develop and deploy it in ways that reflect true human values, that augment human creativity, productivity, and our care for each other, while keeping people firmly at the center of this story, however powerful the technologies are. And I'm very excited to partner with AMD and with you on this journey.

Dr. Lisa Su (01:14:13):

Fei-Fei, I think I speak for everyone. You are really an inspiration to the AI world. Congratulations on all the great progress and thank you for joining us tonight.

Fei-Fei Li (01:14:23):

Thank you, Lisa.

Dr. Lisa Su (01:14:32):

Next, let's turn to the world of healthcare.

Speaker 5 (01:14:50):

Of all the ways AI is advancing the world, healthcare impacts us all, and AMD technology is enabling the incredible to become possible. Cancer detection is happening earlier with supercomputers analyzing data at massive scale. Patients are receiving therapy sooner with compute modeling complex biological systems. Promising treatments are moving forward faster through molecular simulation. Medicine is becoming more personalized through genome research, and patient outcomes are improving through robot-assisted surgeries. Our partners are using AI to accelerate science and better human health. Advanced by AMD.

Dr. Lisa Su (01:15:52):

Look, as you saw in that video, AMD technology is already at work across healthcare. This is one of the most meaningful applications. You've already heard some of the stories tonight about high performance computing and AI, and one of the areas that I am most personally passionate about is how we can bring them to healthcare. There's nothing more important in our lives than our health and the health of our loved ones, and using technology to improve healthcare outcomes means we measure progress in terms of lives saved. I'm very happy to be joined tonight by three experts who are leading the way in applying AI to real world healthcare challenges. Please join me in welcoming Sean McClain, CEO of Absci, Jacob Thaysen, CEO of Illumina, and Ola Engkvist, head of molecular AI at AstraZeneca. All right, guys. Thank you so much for being here. You can see there's a lot of excitement about healthcare. Thank you for the tremendous partnership. Sean, at Absci, you're using generative models and synthetic biology to design new drugs from scratch. Can you walk through a little bit of how that works?

Sean McClain (01:17:13):

Yeah. Thank you so much for having us here today. Biology is hard. It's complex. It's messy. Drug discovery and development is an archaic way of going about discovering drugs. Ultimately, it's a trial and error process where you are searching for a needle in the haystack, but with generative AI and what we're doing at Absci, you're actually able to start creating that needle: engineering in the biology that you want, going after the diseases that have large unmet medical need, and building in the manufacturability and developability that you want in the drug. We're actually able to start having precision engineering with biology now because of AI. Just like Apple is engineering an iPhone or you all are engineering the 455s, we're able to start engineering biology. And what is that actually doing? It's allowing us to start tackling some of the hardest, most challenging diseases that still exist, that have high unmet medical need where the standard of care is poor. And at Absci, this is exactly what we want to tackle. We want to tackle these hard, challenging diseases.

(01:18:37)

One of the two that we're focused on at Absci is androgenetic alopecia, so think common baldness. We actually have the opportunity in the not too distant future to have AI cure baldness. Wouldn't that be incredible? And not only that, we want to focus on areas that have been neglected, like women's health. For far too long women's health has been pushed aside, and we have a drug that we are developing for endometriosis, which affects one in 10 women, with the opportunity to potentially deliver a disease modifying therapy for these women. This is what AI and drug discovery is all about. And this wouldn't be possible without the compute partnership that we've had with AMD. Lisa, you and Mark Papermaster invested in Absci roughly a year ago. And within that year we've been able to scale inference to the point where we can screen over a million drugs in a single day. That's incredible. And additionally, we're getting onto the 355s, and the memory there is going to allow us to contextualize the biology in a way that we haven't been able to before and ultimately create better models for drug discovery.

(01:20:07)

The future is really bright in AI and drug discovery.

Dr. Lisa Su (01:20:11):

That's fantastic, Sean. Well, look, thank you for the partnership. We're really excited about all the work we're doing together. Now, Jacob, Illumina is really a leader in reading and understanding the human genome to improve health. How is AI helping in your work? And talk a little bit about what the impact is for the future of precision medicine.

Jacob Thaysen (01:20:31):

Absolutely. And I'm super excited to be here, and we definitely share a deep passion for impacting health, so I'm looking forward to everything our two companies can do together, both what we have done and what we're going to do. And of course, Sean, I'm rooting for that drug against baldness. Sure. Let me talk a little bit about Illumina. We are the world leader in DNA sequencing, and DNA, as you know, is the blueprint of life that makes each of us unique. Therefore it's essential to be able to measure it to prevent, diagnose, and treat diseases. In a simplified way, you can think about the human genome as three billion letters, which is like a book with 200,000 pages, and that is in each of our cells. Now, if there's just one mistake, a spelling mistake in that book, that can mean the difference between a long and healthy life and a short and terrible life. So accurate DNA sequencing is extremely important, but it is super data- and compute-intensive. In fact, our sequencers generate more data every day than YouTube does.

(01:21:43)

And therefore, the relationship with AMD is super important. We are using your FPGAs and EPYC processors in our sequencers every day, and that's the only way we can compute all of that data and translate it into insights. Over the past decade our technology has been used, as we talked about, in drug discovery, but it is also impacting healthcare. Today it's used for profiling terrible diseases like cancer and inherited diseases, and it's really important: we are impacting a lot of people's health out there and have saved millions of lives. But we're just getting started, and biology is super complex. Our brains can't really comprehend all of it, but the combination of generative AI, genomics, and proteomics is poised to completely change our understanding of biology over the coming years. It will impact drug discovery, but it will also impact how we prevent diseases and treat them early, so it will really change the way we think about longevity and a healthier life.

(01:22:51)

And we can only do that through collaboration between us, everyone on this stage, and the whole ecosystem, so I'm really excited about that.

Dr. Lisa Su (01:22:57):

That's fantastic, Jacob. And Ola, at AstraZeneca, you're scaling AI across one of the largest drug discovery pipelines there is. Talk about how AI is changing the way you develop new medicines.

Ola Engkvist (01:23:09):

Thanks, Lisa. And also thanks for the invitation. At AstraZeneca we really apply AI end to end, from early drug discovery, to manufacturing, to healthcare delivery. For us AI is not only about productivity; it's a lot about innovation. How can we work in a different way? How can we do new things with AI? One area that I'm personally very passionate about is how we can deliver candidate drugs quicker with the help of generative AI. The way we work there is that we train our generative AI models on all the experimental data that we have generated over several decades. Then we use those models to virtually assess, in the computer, which hypotheses, ideas, or candidate drugs might work and which might not. That way we can assess millions of potential candidate drugs, and then we take only the best ones, the ones we think are really good, into the experimental lab to validate the hypothesis there.

(01:24:19)

We use our generative AI models to generate candidate drugs, to modify them, and to optimize them, so we can really reduce the number of experiments we need to do in the laboratory. We are applying this new way of working across the whole AstraZeneca small molecule pipeline, and we see that we can deliver candidate drugs 50% faster and also improve clinical success later on. And we can't do that alone. We need to do it in collaboration, so we collaborate with academia, with AI startups, and with companies like AMD. One very important area for us is hyperscaling, because we have a lot of great data and we really want to create the best models we can. There we work in collaboration with AMD to scale our drug discovery and in seminar flow so we can handle these large new data sets, so basically we optimize the whole workflow with the help of AMD.

Dr. Lisa Su (01:25:25):

That's fantastic, Ola. Look, all of your stories are really amazing and we're thrilled to be working with you to bring these things to life. Now, let's wrap up and think about what's the one thing each of you are most excited about when it comes to how AI will improve healthcare? And maybe Jacob, we'll start with you.

Jacob Thaysen (01:25:42):

Yeah, I'm just excited about the time we are in. This is the first time that we have technology that can create massive amounts of data, and the first time we have the compute power and the generative AI models. That will truly change our understanding of biology, as I mentioned before, and that will translate into huge impact on healthcare.

Dr. Lisa Su (01:26:01):

Ola?

Ola Engkvist (01:26:02):

I think with AI, we can really transform our understanding of biology, so we can go beyond only treating diseases. We should have the ambition as a community that in the future we can prevent chronic diseases.

Dr. Lisa Su (01:26:19):

That's fantastic. Sean, bring us home.

Sean McClain (01:26:22):

Absolutely. So, to riff a little bit on what Ola said, I want to live in a world where we can interact with people before they get sick, where we can provide drugs and treatments that allow them to continue to live their healthy lives, where they're metabolically healthy, they have a full head of hair, and they have that vitality that we all look for. Being able to go from sick care, to preventative care, to ultimately regenerative biology and medicine, where aging is no longer linear. That's the world I want to live in, and AI is going to help us create it. It's an exciting time.

Dr. Lisa Su (01:27:19):

I think we can all say, Sean, we are super inspired. I mean, look, this is what I heard. We should expect AI should help us predict sickness, prevent sickness, and personalize treatments such that we can really extend lives. And you guys are really at the forefront of it, so it is our honor to be your partner. Thank you each for joining us today. And we look forward to really moving this frontier forward over the next few years together. Thank you.

Sean McClain (01:28:01):

Amazing. Thank you.

Jacob Thaysen (01:28:01):

Thank you.

Ola Engkvist (01:28:01):

Thank you.

Dr. Lisa Su (01:28:02):

All right. Now we're entering the world of physical AI. This is where AI enters the real world, powered by high performance CPUs and leadership adaptive computing that enable machines to understand their surroundings and take action to achieve complex goals. At AMD we've spent more than two decades building the foundation of physical AI. Today AMD processors power factory robots with micron-level precision, guide systems that inspect infrastructure as it's being built, and enable less invasive surgical procedures that speed recovery times, and we're doing it together with a broad ecosystem of partners. Physical AI is one of the toughest challenges in technology. It requires building machines that seamlessly integrate multiple types of processing to understand their environment, make real-time decisions, and take precise action without any human input, and all of this happens with no margin for error.

(01:29:02)

Delivering that kind of intelligence takes a full stack approach, high performance CPUs for motion control and coordination, dedicated accelerators to process real-time vision and environmental data, and with an open software ecosystem, developers can move fast and seamlessly across platforms and applications. Now, seeing is believing, so to show how some of this work is unlocking the next generation of robotics, please welcome CEO and co-founder of Generative Bionics, Daniele Pucci, to the stage. Hello, Danny. It's great to have you. Your team is doing some amazing work. Can you just give us some background about what you're doing?

Daniele Pucci (01:29:52):

Lisa, Generative Bionics is the industrial spinout of more than 20 years of research in physical AI and biomechanics at the Italian Institute of Technology. But when we look back, everything actually started from a simple but profound question: if an artificial agent needs to understand the human world, doesn't it need a human-like body to experience it? To answer this, we built some of the most advanced humanoid platforms in the world: iCub for cognitive research, then ergoCub for safe industrial collaboration, and then iRonCub, the only jet-powered flying humanoid robot in the world. Throughout the process of building these robots, however, Lisa, one belief has never changed: the real work in technology is the work that amplifies human potential and is built around people, not the other way around. This belief has become the mission of Generative Bionics, but to make it real, we need compute that is fast, deterministic, and local.

(01:31:01)

Human-like touch, balance, and safety loops, for instance, cannot wait for the cloud. That's why our collaboration with AMD is so fundamental. AMD gives us a unified continuum, from embedded edge platforms such as Ryzen AI Embedded and Versal AI Edge running physical AI on the robot, to AMD CPUs and GPUs powering simulation, training, and large-scale development. Lisa, one compute architecture from one partner, end to end.

Dr. Lisa Su (01:31:34):

I like that. I like that a lot. Now, look, let's talk a little bit about your philosophy and approach to how are you building these things and what are your use cases?

Daniele Pucci (01:31:45):

We think, Lisa, that humanoid robots now have to be elevated to another level, so our approach to physical AI is to build a platform around the humanoid robot. The platform is designed to achieve human-level intelligence and safe physical human-robot interaction, and to be engineered into real products. Let's start with the robot. Here we are really inspired by biomechanics. In fact, human movements rely on fast reflexes: we walk by falling forward, and our nervous system exploits our biomechanics, so we are applying the same principles to our humanoid robots. Humans also learn through touch, which is our primary source of intelligence, so we believe that humanoid robots really need a sense of touch. And finally, let's talk about the platform.

(01:32:40)

We are developing an open platform around the humanoid robot to enable the next generation of humanoid robots. Just to give an example, the same tactile sensors that we use in the humanoid robot are also used in a sensorized shoe that is being used in healthcare to help patients recover better and faster. But more importantly, the shoe acts as another robot sensor, so the robot can feel whether and how to help the patient. Lisa, we are not building a robot… We are not only building a robot, we are not only building a product; we are building a platform to close the loop between humans and humanoid robots, enabling what we call human-centric physical AI.

Dr. Lisa Su (01:33:30):

That's super cool, Danny. Now, we are at CES and people like to see things, so what exciting news do you have for us?

Daniele Pucci (01:33:39):

Lisa, we focused on a new product identity and our first humanoid robot design, which defines our DNA in terms of products: GENE.01. And I'm really happy to say that GENE.01 is ready to be released right now.

Dr. Lisa Su (01:33:56):

Is this gorgeous or what? Danny, tell us about GENE.01.

Daniele Pucci (01:34:54):

Our vision is a future where humans remain at the center supported by technology. That's why we focus on building humanoid robots that people can trust and accept. For us, acceptability means beauty, grace, and safety. GENE.01 is Italian by design.

Dr. Lisa Su (01:35:13):

Is it really Italian?

Daniele Pucci (01:35:14):