Heavy AI Users Face 3x More Hallucinations and Spend 10x Longer to Get Answers

Survey insights show heavy AI users are nearly 3x more likely to face frequent hallucinations, 10x more likely to spend over 11 minutes getting a satisfying answer, and more likely to struggle with phrasing prompts despite their experience.

In the nearly three years since ChatGPT's debut, AI prompting has gone from an experimental novelty to an everyday staple. But using it frequently and using it well are two very different things. For example, the same question can get wildly different answers depending on how it's phrased.

To understand how people are navigating the gap, we surveyed over 1,000 artificial intelligence users across different generations, industries, and skill levels about their habits, challenges, and AI results.

The results revealed a few surprising truths: fast answers aren't always better, experience isn’t always a good thing, and the gap between casual and heavy users is wider than you might think.

Key Takeaways

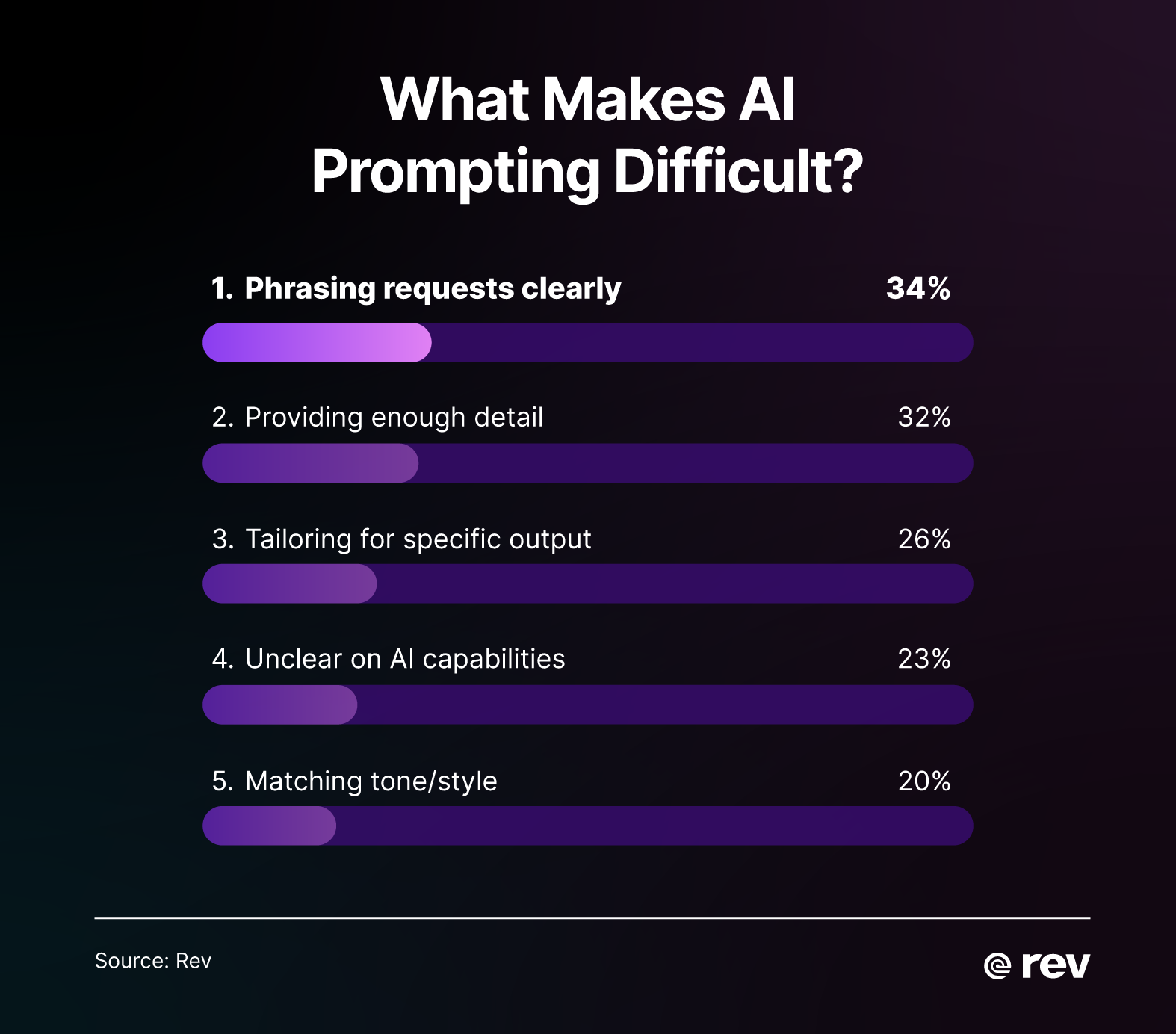

- A third (34%) of all users say phrasing prompts clearly is their top challenge.

- Heavy AI users are nearly 3x more likely to experience frequent hallucinations.

- Casual monthly users are 14x more likely than daily users to skip double-checking AI's work.

- Users who write very long prompts are 32x more likely to revise "very often" for hallucinations.

- People who feel they're getting better at AI prompting are 64% more likely to say they never experience hallucinations.

Power Users Are 10x More Likely To Need 11+ Minutes for a Satisfying AI Output

For most people, using AI is quick — 77% get an answer they like in under two minutes. But for the power users who live in these tools for over six hours a week, that number drops to just 50%.

Notably, power users are 10x more likely than casual users to find themselves tweaking, rewriting, and wrestling with AI for over 11 minutes until they get an answer they're satisfied with (21% vs. just 2% of light users).
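The "10x" figure is a ratio of two shares (the percentage of each group that needs more than 11 minutes), not a comparison of average time spent. A minimal Python sketch of that arithmetic, using the 21% and 2% figures quoted above; the function name `relative_likelihood` is our own illustration, not part of the survey:

```python
def relative_likelihood(pct_group: float, pct_baseline: float) -> float:
    """Return how many times as likely one group is as another, given two percentages."""
    if pct_baseline <= 0:
        raise ValueError("baseline percentage must be positive")
    return pct_group / pct_baseline


# Share of each group that spends over 11 minutes before getting a satisfying answer
power_users_pct = 21.0  # users with 6+ hours of AI use per week
light_users_pct = 2.0   # light users

ratio = relative_likelihood(power_users_pct, light_users_pct)
print(f"Power users are {ratio:.1f}x as likely to spend over 11 minutes.")
```

The result, 10.5, is what rounds to "10x"; it says nothing about how many minutes each group spends on average.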

This isn't necessarily tied to inefficiency — complexity is the likely culprit. Heavy users are trying to do much harder things, like getting the AI to perform an in-depth analysis or perfectly mimic a specific writing style. Meanwhile, light users usually stick to simple requests that lead to quick victories.

Of course, it doesn't help that power users have higher standards — once you know how impactful the output can be, it's tough to settle for just "good enough."

Here's a breakdown of the data we uncovered on AI prompt lengths:

- Prompt length preference: 47% of all users prefer two- to three-sentence prompts, while 25% stick to single sentences

- Generational differences: 81% of baby boomers use one to three sentences for their prompts vs. 60% of Gen Z

- Longer prompt usage: Gen Z users write multi-paragraph prompts more than twice as often as boomers (34% vs. 15%)

Baby boomers reach satisfaction within two minutes slightly more often than Gen Z (79% vs. 74%) and report fewer hallucinations (21% never experience them vs. 13% of Gen Z). This suggests that brevity may be a quiet superpower (or that some users simply aren't checking the AI's work too closely).

1 in 3 Say the Top Prompting Challenge Is Phrasing Requests Clearly

For all the hype about how AI responds to natural language, in reality, a third of users find it easier said than done.

34% of respondents pointed to "phrasing requests in a way the AI understands" as their biggest AI hurdle, ahead of other common stumbling blocks like knowing the right level of detail to provide (32%) or tailoring instructions to get the specific output they're looking for (26%).

You'd think practice would make perfect, but the data suggest otherwise. Users who spend over six hours each week with AI tools are more likely than casual users to get stuck on phrasing (42% vs. 33%). And this isn't a one-off issue.

Despite their experience, these power users reported 7% more prompting problems across the board. Casual users, on the other hand, are 1.5x more likely to have trouble grasping what the AI is actually capable of.

Age-related patterns add another layer of complexity, as each generation is prone to its own unique challenges:

- Gen Z users struggle most with matching tone and style in AI prompting (35% report this as their top challenge).

- Millennials wrestle with providing the right level of detail in their AI prompts (33% cite this issue).

- Gen X users most often cite phrasing requests in a way the AI understands as their biggest prompting challenge (33%).

- Boomers also struggle most with phrasing their requests in a way the AI understands (34%).

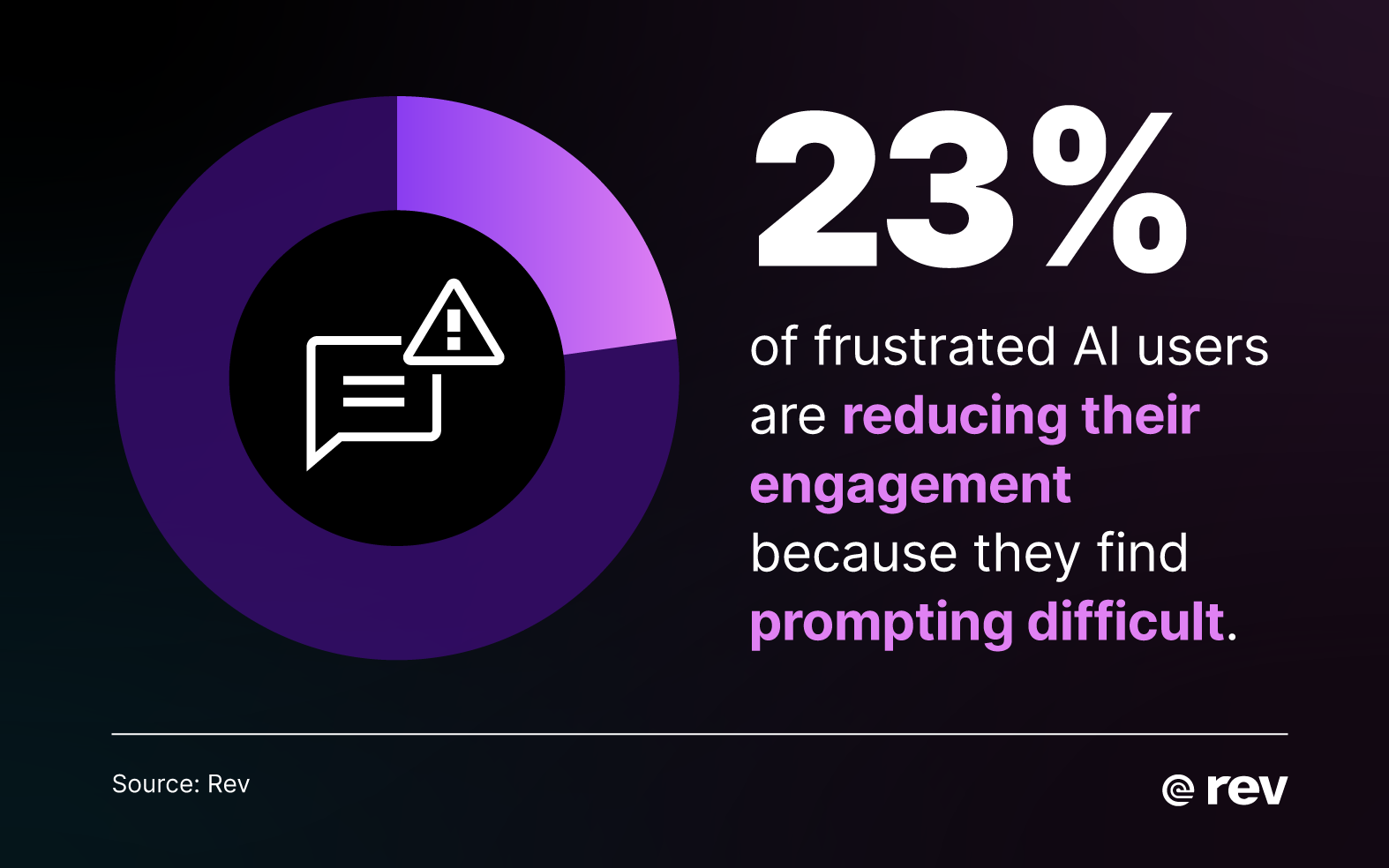

Simplicity might be an undervalued AI prompting strategy. More than 1 in 4 respondents who are frustrated with AI (28%) say their top challenge is "having to rewrite the same prompt multiple times" — compared to just 17% of efficient users.

These frustrated users also experience hallucinations 25% more often and are more likely to abandon AI altogether because prompting feels too difficult (23% vs. an 18% baseline).

17% Never Have To Revise AI Outputs for Accuracy

It turns out AI hallucinations aren't a rare glitch — they're a regular annoyance for most users.

According to our survey, only 17% of people said they never have to rewrite their prompts to correct for false or inaccurate information. If so many people are constantly rewriting prompts to get an answer they like, it raises the question of whether AI is really as helpful as it's advertised to be.

Because of this, daily AI users have learned to be cautious: only 1% admit they don't double-check the AI's work, compared with 14% of casual monthly users and 24% of rare AI users. Basically, the more reps you have, the less you take outputs at face value.

The More You Use AI, the More Skeptical You Learn to Be

Here's a surprising insight from our data: the more you use AI, the more flaws you see.

This doesn't necessarily mean tools are failing advanced users. Instead, it tells us that experienced users are either asking tougher questions or have just gotten much better at noticing when the AI gets something wrong.

Here are some key findings at a glance:

- Those who feel they're getting better at writing AI prompts are 64% more likely to never experience hallucinations compared to those who feel it's taking more effort (18% vs. 11%).

- 45% of those who get a satisfactory AI response in under one minute say that hallucination revisions are "rare."

- When a session drags past 20 minutes, almost 9 out of 10 (88%) say they "very often" have to revise for hallucinations.

- Heavy users run into frequent hallucinations nearly three times as often as casual users (34% vs. 12%).

- People using very long prompts (e.g., entire documents or web pages, multi-source context, continual updating) are 32x more likely to revise for hallucinations "very often" (32% vs. 1% for one-sentence prompts).

- Only 11% of large-input users ever get a perfect result on the first try, compared with 19% of short-prompt users.
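These findings mix "x times as likely" with "% more likely", which are two views of the same ratio: a ratio of 1.64 means 64% more likely. A minimal Python sketch that converts the quoted percentage pairs both ways; `compare` is a hypothetical helper, and the pairs are taken directly from the list above:

```python
def compare(pct_a: float, pct_b: float) -> tuple[float, float]:
    """Return (ratio, percent more likely) for group A relative to group B."""
    ratio = pct_a / pct_b
    percent_more = (ratio - 1) * 100
    return ratio, percent_more


# (finding, group A %, group B %) as reported in the survey findings
findings = [
    ("Never experience hallucinations: improving vs. struggling prompters", 18.0, 11.0),
    ("Frequent hallucinations: heavy vs. casual users", 34.0, 12.0),
    ('Revise "very often": very long vs. one-sentence prompts', 32.0, 1.0),
]

for label, a, b in findings:
    ratio, more = compare(a, b)
    print(f"{label}: {ratio:.2f}x as likely ({more:.0f}% more likely)")
```

Here 18% vs. 11% works out to about 1.64x (64% more likely), and 34% vs. 12% to about 2.83x, which is why it is described as "nearly three times."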

The takeaway is that longer, open-ended sessions and huge walls of text often expose the model's limits—or simply give users more surface area to notice errors. If you want good AI results, and you want them fast, keep your prompts short and to the point, and be prepared to tweak them a few times.

ChatGPT Dominates: 51% Say It Understands Their Prompts Best

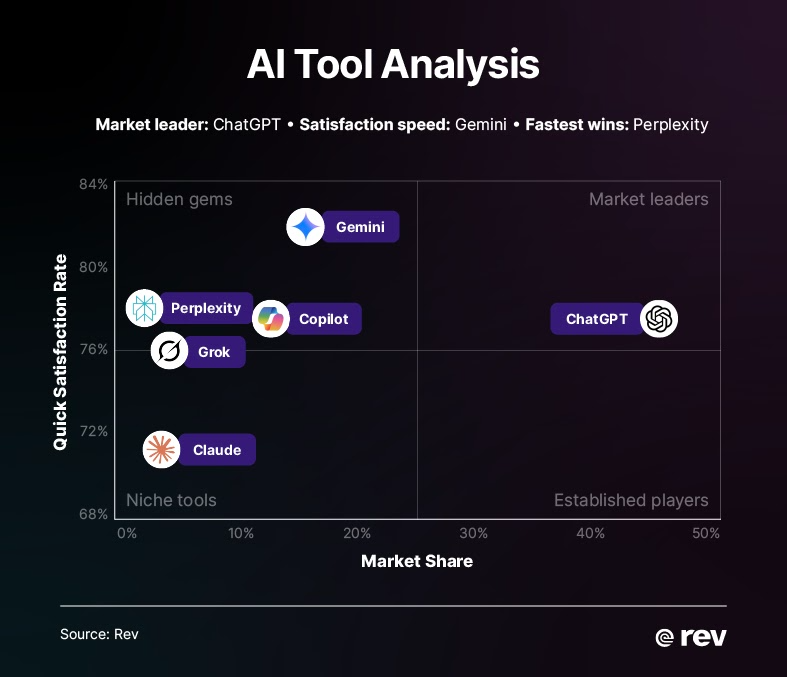

With 700 million weekly users, it's no surprise that ChatGPT is king, and over half (51%) of all survey respondents say it's the AI tool that best understands their prompts.

Its closest competitor, Gemini, lags far behind at 16%. That lead spans every generation, but the gap narrows with age. Nearly three-quarters of Gen Z (72%) prefer ChatGPT, compared to just over a third of baby boomers (35%).

A closer look at this age gap suggests younger users are also more willing to shop around, while older users tend to pick one tool and stick with it. More than a quarter of boomers (28%) admit they haven't tried enough AI tools to compare, something only 8% of Gen Z would say.

ChatGPT has also cornered the market on casual users. Nearly half (48%) of its fans use AI for less than an hour a week. But power users are starting to look elsewhere. Out of all Gemini users, for instance, 14% use it for over six hours a week. And among Perplexity's very small user base (1% market share), a third are on it multiple times a day.

Which AI Platform Gives The Best AI Results?

So which tool is actually the best? It depends on what you need. ChatGPT is seen as the most reliable all-rounder, with its users reporting the fewest hallucinations. However, Gemini edges it out slightly in quick satisfaction rates (49% vs. 46%).

Additionally, Perplexity is the fastest AI tool to deliver a satisfying response, averaging just 1.9 minutes — the only platform with a sub-two-minute average.

Still, only 54 seconds separates the fastest platform (Perplexity) from the slowest (Microsoft Copilot), showing just how competitive the market really is.

Rev Can Help You Say It Right

AI doesn't reward the loudest voice — it rewards the clearest one. Our survey shows that across generations and experience levels, people who feel confident in their prompting see faster AI results and avoid common frustrations like rewrites and hallucinations.

If you want to move past trial and error, Rev's Multi-File Insights is designed to help you get more from your AI interactions. Unlike traditional AI prompting, where you're working with a single response, Rev's Insights lets you find answers across all your files at once.

Whether you're compiling meeting notes, analyzing legal transcripts, or extracting common themes from a month's worth of interviews, Insights pulls the key information together for you, saving you hours and helping you get to the right answer, faster.

Methodology

The survey was conducted by Centiment for Rev and fielded between July 25 and July 28, 2025. The results are based on 1,038 completed surveys. To qualify, respondents had to be residents of the United States over 18 years of age who had used a prompt to ask AI a question. Data is unweighted, and the margin of error is approximately +/-2% for the overall sample with a 95% confidence level.
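For readers who want to gauge how precise the subgroup comparisons are, here is a minimal Python sketch of the textbook margin-of-error formula for a proportion, z * sqrt(p(1 - p) / n). It assumes a simple random sample, the conservative p = 0.5, and z = 1.96 for 95% confidence. The methodology does not state which formula produced its +/-2% figure; this textbook version gives about +/-3.0 points for n = 1,038. The subgroup size of 250 is hypothetical, since group sizes are not reported.

```python
import math


def margin_of_error(n: int, p: float = 0.5, z: float = 1.96) -> float:
    """Normal-approximation margin of error for a proportion from a simple random sample.

    p = 0.5 gives the widest (most conservative) margin; z = 1.96 corresponds to 95% confidence.
    """
    return z * math.sqrt(p * (1 - p) / n)


overall = margin_of_error(1038)  # full sample size reported in the methodology
subgroup = margin_of_error(250)  # hypothetical subgroup size, for illustration only

print(f"Full sample (n=1,038): +/-{overall * 100:.1f} points")
print(f"Hypothetical subgroup (n=250): +/-{subgroup * 100:.1f} points")
```

Because the margin shrinks only with the square root of n, comparisons between smaller groups, such as a single generation, carry wider margins than the overall sample.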